The number of published vulnerabilities has been increasing year on year, by 34% according to MITRE CVE statistics.

(FIRST/CVE)

It is no secret that the complexity of vulnerabilities in cloud and application security is consistently increasing.

Modern organizations are building applications ever faster, and security teams are under growing pressure to keep up with them.

I recently spoke at the Open Security Summit about this growing pressure.

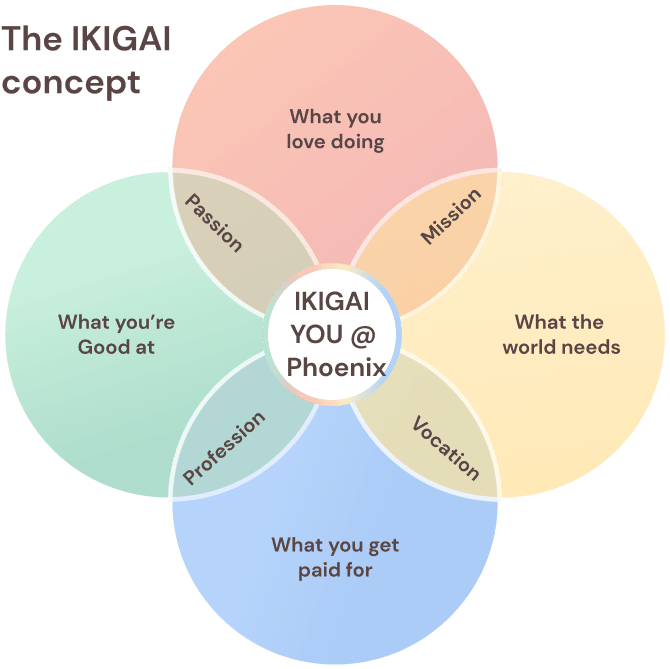

It comes as no shock that 54% of application security and cloud security professionals have considered changing jobs or industries in the last few years.

(CxO online)

Increased Complexity in Application Security and Cloud Security Leads to a Wider Skills Shortage

In these challenging times, there is a well-known, acute shortage of cybersecurity skills, and it is most pronounced in the cloud security and application security spaces.

This is no surprise, since these two spaces are arguably harder for newcomers to grasp.

Meanwhile, new vulnerabilities keep being published and the number of cloud assets and applications keeps growing, with COVID-19 being the main driver of remote and digital assets.

96% of leaders report that Covid-19 will accelerate their digital transformation by an average of 5.3 years.

63% of leaders state that the Covid-19 pandemic prompted them to embrace digital transformation sooner than originally planned.

(IBM, Celerity)

These numbers will keep increasing, driven by the heavier workload and the additional complexity.

So what other factors contribute to these shortages?

- Increased number of vulnerabilities

- Increased complexity of applications and of where they are deployed

- Incident response techniques that are still largely manual and rely on antiquated playbooks

- Increased overall complexity

- Reduced time to release

- New workforce and reshuffling

We recently wrote a whitepaper that expands on this problem; some considerations on the subject follow. https://phoenix.security/whitepapers-resources/whitepaper-vulnerability-management-in-application-cloud-security/

Focus and Balance on the Vulnerabilities That Matter Most in Application Security and Cloud Security

What is the solution to all this?

Focus and balance

Focusing on what is exploitable and prioritizing vulnerabilities by risk are key to partially addressing the skills shortage and reducing burnout. Helping an organization’s security team scale better lets it dedicate more time to training new security professionals and to converting and upskilling developers, and it prevents burnout caused by triage.

Balance what is built with what is fixed: fixing application security and cloud security issues at the end of a cycle requires 10x more effort than fixing them when they are first discovered.

Context-based risk assessment: focusing on every published vulnerability is simply impossible and impractical.

70% of developers skip security steps because there are simply too many vulnerabilities to fix, yet only 10-14% of those vulnerabilities need attention right away.

Fixing a potentially severe vulnerability on an external website, or on any server linked to an externally facing server, is much more critical than fixing one on a fully internal system that hosts no critical data.

Contextual Prioritization and Risk-based prioritization

Fortunately, there is a methodology that helps express a vulnerability’s “severity” in context:

Risk = Probability (Likelihood of exploitation, Locality) * Severity * Impact

Contextual aspects are based on:

- Severity of a vulnerability – how dangerous a third-party vendor has declared the vulnerability to be

- Probability of exploitation – how likely the vulnerability is to be exploited

- Locality – where the affected asset sits (for example, externally facing or fully internal); it is a factor in the probability of exploitation

- Impact (a factor of the Business Impact Assessment) – how much damage the vulnerability could cause to the organisation

Risk-based threat assessment is usually done by security professionals, but it quickly becomes overwhelming: there are simply too many factors to consider, and they change too quickly.
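To make the formula concrete, here is a minimal Python sketch. It assumes every factor has already been normalised to a 0.0-1.0 scale and that locality simply scales the likelihood of exploitation; the class, field names and numbers are hypothetical illustrations, not a Phoenix Security API.

```python
from dataclasses import dataclass


@dataclass
class Vulnerability:
    """Hypothetical scoring inputs, each normalised to the range 0.0-1.0."""
    name: str
    severity: float    # vendor-declared severity, e.g. CVSS base score / 10
    likelihood: float  # likelihood of exploitation
    locality: float    # assumption: 1.0 = externally facing, lower = internal only
    impact: float      # business impact assessment


def probability(v: Vulnerability) -> float:
    # Assumption: locality scales the likelihood of exploitation.
    return v.likelihood * v.locality


def risk(v: Vulnerability) -> float:
    # Risk = Probability(Likelihood, Locality) * Severity * Impact
    return probability(v) * v.severity * v.impact


external = Vulnerability("vuln-A on public website", severity=0.9,
                         likelihood=0.8, locality=1.0, impact=0.9)
internal = Vulnerability("vuln-A on internal host", severity=0.9,
                         likelihood=0.8, locality=0.2, impact=0.3)

# Highest contextual risk first
for v in sorted([internal, external], key=risk, reverse=True):
    print(f"{v.name}: risk={risk(v):.3f}")
```

Both findings carry the same vendor severity, yet the externally facing one scores 0.648 against 0.043 for the internal host, which is the contextual ordering described above.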

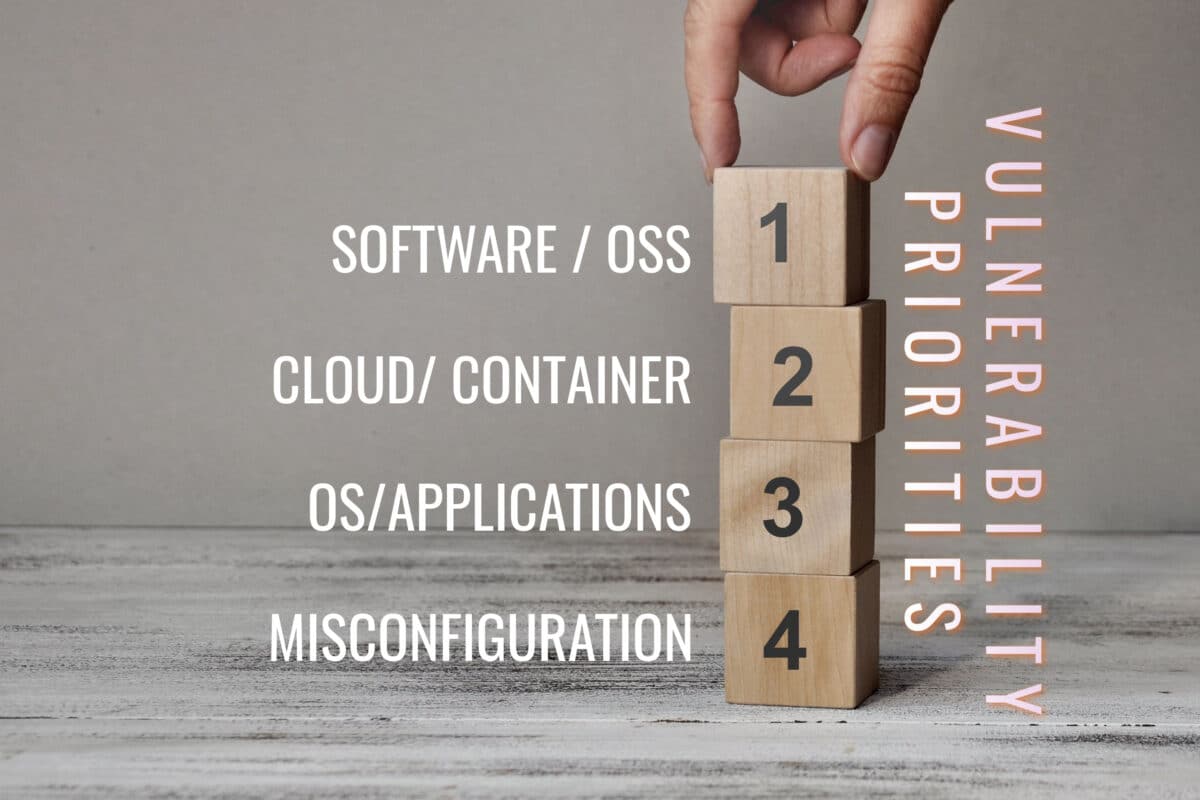

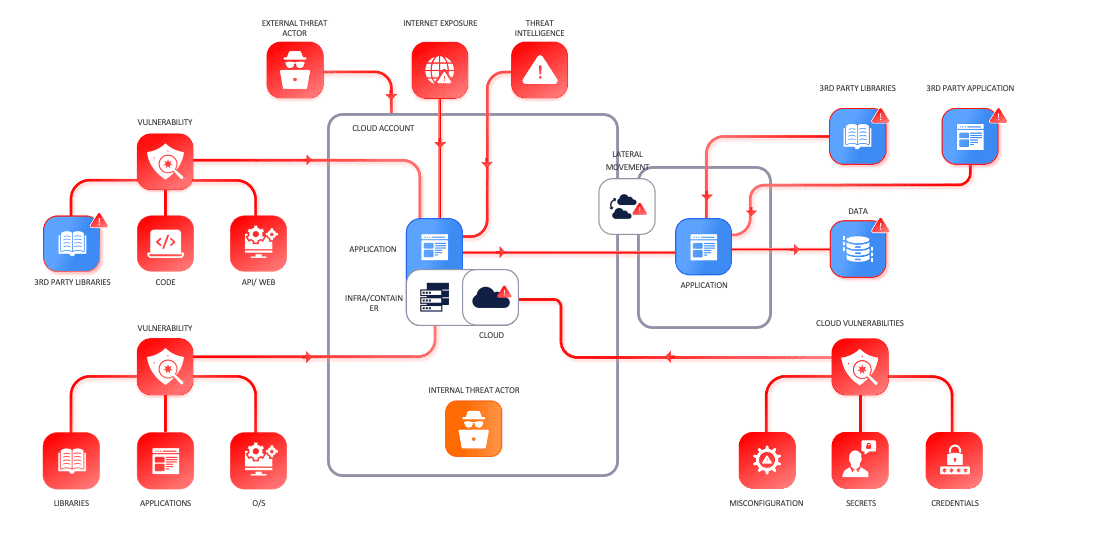

The following are the elements security professionals need to consider when triaging and deciding which vulnerabilities to fix first:

- How the application is built

- Where it is deployed (which network, which environment)

- What kind of data the application processes

- How many of its components are external, internal, or connected to those

- What vulnerabilities exist in the code, libraries and APIs the application is built with

- Where the encryption keys are stored, and whether the storage system is misconfigured

- Whether any of the systems the application is deployed on are vulnerable, or have become vulnerable

- Whether any of the software on the systems where the application is deployed is vulnerable

- Whether any threat actor group is targeting a specific vulnerability or system

- What the blast radius would be if one of those components were compromised

The complexity of this scenario increases further with the speed of deployment: some environments are modified 100 times per day. Not all of those deployments will lead to a potential compromise or misconfiguration, but some might.

Risk cannot be completely avoided, so organisations need to choose where to apply their efforts to reduce it. Cybersecurity risk management (RM) helps enterprises decide which systems and information to prioritise and which risks to tolerate.

Security teams cannot be scaled infinitely, and more developers are being trained than security researchers.

Scaling security in a traditional organization is challenging. It requires automation, and a selection of which application security and cloud security vulnerabilities and misconfigurations to fix that keeps pace with the development team’s speed of deployment and growth.

A solution that triages automatically and proposes what to mitigate needs to consider the following:

Probability of exploitation

- The severity of a vulnerability (CVE, CWE, CVSS and CWSS)

- The locality of an asset, also known as context

- Exploitability of a vulnerability, based on the availability of a proof of concept or code snippet

- Probability of an attacker targeting the vulnerability

- Active exploitation of the vulnerability by threat actor groups

- Discussion on Twitter, LinkedIn, Reddit and other forums

- How recent the vulnerability is (vulnerabilities are exploited or targeted more frequently in their first 40 days)
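The exploitation signals above can be sketched as a simple weighted score. This Python example is an illustration under stated assumptions: the weights, the baseline and the `Signals` fields are hypothetical and uncalibrated, locality is assumed to scale the result, and severity is left out because the risk formula multiplies it in separately. Real prioritization models are trained on data rather than hand-weighted.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical, uncalibrated weights chosen for illustration only.
# BASELINE plus all weights sums to 1.0, so the score stays within 0.0-1.0.
BASELINE = 0.10
WEIGHTS = {
    "poc_available": 0.25,       # proof of concept or code snippet exists
    "actively_exploited": 0.40,  # threat actor groups exploiting it in the wild
    "social_chatter": 0.10,      # discussed on Twitter, LinkedIn, Reddit, forums
    "recent": 0.15,              # published within the recency window
}
RECENCY_WINDOW_DAYS = 40  # window mentioned in the article


@dataclass
class Signals:
    published: date
    poc_available: bool
    actively_exploited: bool
    social_chatter: bool
    locality: float  # assumption: 1.0 = externally facing, lower = internal only


def exploitation_probability(s: Signals, today: date) -> float:
    """Weighted sum of exploitation signals, scaled by the asset's locality."""
    flags = {
        "poc_available": s.poc_available,
        "actively_exploited": s.actively_exploited,
        "social_chatter": s.social_chatter,
        "recent": (today - s.published).days <= RECENCY_WINDOW_DAYS,
    }
    score = BASELINE + sum(WEIGHTS[name] for name, on in flags.items() if on)
    return min(score, 1.0) * s.locality


fresh = Signals(date(2023, 3, 1), poc_available=True, actively_exploited=True,
                social_chatter=False, locality=1.0)
print(round(exploitation_probability(fresh, today=date(2023, 3, 20)), 2))
print(round(exploitation_probability(fresh, today=date(2023, 9, 1)), 2))
```

The same finding scores 0.9 while it is inside the 40-day window and 0.75 once it ages out, so recency alone shifts its place in the queue.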

Impact on the system

- What data is at risk

- How many users are affected

- How much revenue could be impacted

- Contractual impact

- Brand image damage
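The impact factors can be folded into the same score. The Python sketch below uses hypothetical weights and asset names to turn the five impact questions into a weighted 0.0-1.0 score, then ranks findings by Probability * Severity * Impact. It illustrates the idea only; it is not how any particular product weighs impact.

```python
# Hypothetical weights for the business-impact factors; each factor is
# scored 0.0-1.0 by the asset owner, and the weights sum to 1.0.
IMPACT_WEIGHTS = {
    "data_sensitivity": 0.30,  # what data is at risk
    "users_affected": 0.20,    # how many users are affected
    "revenue_at_risk": 0.25,   # how much revenue could be impacted
    "contractual": 0.15,       # contractual impact
    "brand_damage": 0.10,      # brand image damage
}


def impact_score(factors: dict[str, float]) -> float:
    """Weighted average of the impact factors; missing factors count as 0.0."""
    return sum(w * factors.get(name, 0.0) for name, w in IMPACT_WEIGHTS.items())


def risk(probability: float, severity: float, impact: float) -> float:
    # Risk = Probability * Severity * Impact, all on a 0.0-1.0 scale
    return probability * severity * impact


# Hypothetical assets and their impact factor scores
assets = {
    "payments-api": {"data_sensitivity": 1.0, "users_affected": 0.8,
                     "revenue_at_risk": 0.9, "contractual": 1.0,
                     "brand_damage": 0.7},
    "internal-wiki": {"data_sensitivity": 0.2, "users_affected": 0.3},
}

# Hypothetical findings: (asset, probability of exploitation, normalised severity)
findings = [("internal-wiki", 0.9, 0.98), ("payments-api", 0.6, 0.75)]

ranked = sorted(findings,
                key=lambda f: risk(f[1], f[2], impact_score(assets[f[0]])),
                reverse=True)
for asset, p, sev in ranked:
    print(asset, round(risk(p, sev, impact_score(assets[asset])), 3))
```

Here the internal wiki finding has the higher severity and exploitation probability, but the payments API finding ranks first (about 0.407 versus 0.106) because of its business impact.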

Our whitepaper covers these factors in more depth: https://phoenix.security/whitepapers-resources/whitepaper-vulnerability-management-in-application-cloud-security/

How to set targets

Service Level Objectives and Agreements (SLOs/SLAs) are not a solution in themselves, but they can help set targets for teams when nothing else is in place.

Expanding on the subject here would take too long; we have written several whitepapers and articles on it.

In short, risk-based targets are much more precise and adapt to context.

For more details on how to set metrics, see our article on SLAs, SLOs and OKRs: https://phoenix.security/vulnerability-infrastructure-and-application-security-sla-slo-okr-do-they-matter/

We are also publishing another whitepaper on SLAs:

https://phoenix.security/whitepapers-resources/data-driven-vulnerability-managementre-sla-slo-dead/

Conclusion

Ultimately, CVE and CVSS are a good starting point. However, with only 10-14% of vulnerabilities being targeted at any given time, security and development teams need to focus on those most likely to be exploited.

Freeing security professionals from data analysis and routine triage enables them to focus on training the development team and on triaging the most critical vulnerabilities.

Security professionals can also spot interconnections between systems better than machines can, and they can correlate procedural violations.

Security professionals with more time can also propose mitigation strategies, such as compensating controls and system upgrades, that no AI or tool would normally be able to determine.

On the flip side, technology is now much more capable of correlating a large number of data sources at scale, using AI models such as decision trees, neural networks, and random forest classifiers. Most importantly, elastic-net models trained with gradient boosting can quickly digest large amounts of data.

Prediction models built on technology such as Phoenix Security can process trillions of data sources and dynamic context and convert them into risk. This frees security professionals to focus on the most important vulnerabilities.