Organisations must remain vigilant to safeguard their digital assets in a rapidly changing cybersecurity landscape. Vulnerability management is a systematic and proactive approach to identifying, assessing, prioritizing, and mitigating vulnerabilities within an organization’s IT infrastructure, applications, and systems, and the EPSS model is well suited to vulnerability management in application, cloud and infrastructure security. EPSS provides one of the inputs to cyber risk quantification, which is itself a pivotal element of a vulnerability management scoring system.

The Forum of Incident Response and Security Teams (FIRST) plays a key role by offering guidance and best practices for security incident response. A crucial initiative spearheaded by FIRST is the Exploit Prediction Scoring System (EPSS) Model, a data-driven framework for estimating the probability that a published software vulnerability will be exploited in the wild. This blog article explores the EPSS Model, its objectives, its components, and how it leverages machine learning to predict vulnerability exploitation.

Overview of EPSS Model

The EPSS Model is a machine learning-based system that predicts the likelihood of a vulnerability being exploited within the next 30 days. The version discussed here, EPSS v3, is the third iteration of the model and incorporates lessons learned from previous versions to improve the design and efficacy of the scoring system.

Development and Features

The authors of the EPSS Model combined exploit data with community insights to predict which vulnerabilities are likely to be exploited. By using a more diverse feature set and state-of-the-art machine learning techniques, the model improved its prediction performance by 82% compared to its predecessor.

EPSS scores all vulnerabilities published in MITRE’s CVE List (and the National Vulnerability Database), significantly reducing the effort required to patch critical vulnerabilities compared with CVSS-based strategies.

Advantages of EPSS Model

One of the primary benefits of the EPSS Model is its open, community-driven nature, which allows for widespread access, transparency, and community contributions. Because it makes predictions from vulnerability and exploitation data, it predicts exploitation more accurately than scoring systems that rely solely on severity ratings.

What data does EPSS Utilize?

According to the latest EPSS paper, the data used in this research is based on 192,035 published vulnerabilities (not marked as “REJECT” or “RESERVED”) listed in MITRE’s Common Vulnerabilities and Exposures (CVE) list through December 31, 2022.

The EPSS model in detail

The Exploit Prediction Scoring System (EPSS) Model predicts the likelihood of a vulnerability being exploited within the next 30 days. Although we don’t have access to the detailed inner workings of this specific model, the following is an overview of how a model of this kind works:

- Data collection and preprocessing: The first step in building a machine learning model is to gather relevant data. For the EPSS Model, this includes data on known vulnerabilities, associated exploits, and other factors that may influence exploitability. Data sources can include the MITRE CVE List, the National Vulnerability Database, and various threat intelligence feeds. This data is then preprocessed and cleaned to ensure it is suitable for training the model.

- Feature engineering: The next step is identifying and extracting relevant features from the collected data. Features are individual measurable properties or characteristics of the vulnerabilities that can help the model predict exploit likelihood. Examples of features include the age of the vulnerability, the affected software or hardware, the type of vulnerability, the complexity of the exploit, and the availability of exploit code.

- Model selection and training: Once the features are identified and extracted, an appropriate machine learning algorithm is selected based on the problem and data characteristics. The EPSS Model likely utilizes a supervised learning algorithm, where the model is trained on a labelled dataset with known outcomes (whether a vulnerability was exploited or not). The training process involves adjusting the model’s parameters to minimize prediction errors.

- Model evaluation and validation: After training the model, its performance must be evaluated using a separate dataset that was not part of the training process. Common evaluation metrics for classification problems include accuracy, precision, recall, and F1 score. The model might also be validated using techniques such as cross-validation or bootstrapping to ensure its reliability and robustness.

- Prediction and scoring: Once the model is trained and validated, it can be used to predict the likelihood of exploitation for new, previously unseen vulnerabilities. The model assigns a score to each vulnerability, with higher scores indicating a higher probability of being exploited within the next 30 days.

- Continuous improvement: As new vulnerability and exploit data becomes available, the model must be updated and retrained to maintain accuracy and relevance. This can involve updating the feature set, refining the model’s parameters, or changing the underlying machine-learning algorithm if necessary.
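
The steps above can be sketched end to end in a few lines of Python. This is a toy illustration, not the EPSS model: the raw records are invented, the three binary features (public exploit code, remote code execution, widely deployed software) are hypothetical choices, and plain logistic regression trained by batch gradient descent stands in for whatever supervised algorithm EPSS actually uses. Evaluation on held-out data is omitted for brevity.

```python
import math

# Data collection: invented toy records, NOT real EPSS training data.
# "exploited" is the label: 1 if exploitation was observed within 30 days, else 0.
RAW = [
    {"exploit_refs": 3, "vuln_type": "rce", "widely_deployed": True, "exploited": 1},
    {"exploit_refs": 1, "vuln_type": "xss", "widely_deployed": True, "exploited": 1},
    {"exploit_refs": 2, "vuln_type": "rce", "widely_deployed": False, "exploited": 1},
    {"exploit_refs": 1, "vuln_type": "xss", "widely_deployed": False, "exploited": 0},
    {"exploit_refs": 0, "vuln_type": "rce", "widely_deployed": True, "exploited": 0},
    {"exploit_refs": 0, "vuln_type": "xss", "widely_deployed": True, "exploited": 0},
    {"exploit_refs": 0, "vuln_type": "rce", "widely_deployed": False, "exploited": 0},
    {"exploit_refs": 0, "vuln_type": "xss", "widely_deployed": False, "exploited": 0},
]


def extract_features(record):
    """Feature engineering: map a raw record to three hypothetical binary features."""
    return [
        1.0 if record["exploit_refs"] > 0 else 0.0,    # public exploit code exists
        1.0 if record["vuln_type"] == "rce" else 0.0,  # remote code execution
        1.0 if record["widely_deployed"] else 0.0,     # affects widely deployed software
    ]


def predict(weights, bias, features):
    """Prediction and scoring: a value in (0, 1); higher means more likely exploited."""
    z = bias + sum(w * f for w, f in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))  # logistic (sigmoid) function


def train(records, lr=0.5, epochs=2000):
    """Model training: logistic regression fitted by batch gradient descent on log-loss."""
    data = [(extract_features(r), r["exploited"]) for r in records]
    weights, bias, n = [0.0] * len(data[0][0]), 0.0, len(data)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(weights), 0.0
        for x, y in data:
            err = predict(weights, bias, x) - y  # gradient of log-loss w.r.t. the logit
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


weights, bias = train(RAW)
new_vuln = {"exploit_refs": 5, "vuln_type": "rce", "widely_deployed": True}
print(round(predict(weights, bias, extract_features(new_vuln)), 3))
```

In this sketch, continuous improvement amounts to calling `train` again as newly labelled records arrive.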

The specific details of the EPSS Model, such as the exact features used and the machine learning algorithm employed, might be private. However, several white papers on the subject disclose the data sources and clarify how the model is built.

Challenges and Limitations

Despite its advantages, the EPSS Model has some limitations you must weigh when adopting it in your vulnerability management program. Vulnerability scores can change rapidly as new information emerges, so organisations need to monitor them continuously and update patching priorities accordingly. Furthermore, accurate real-time forecasting remains difficult because cyber threats are constantly evolving.

The model’s accuracy (precision) and coverage (recall) have improved. Some limitations remain, such as the timeliness and availability of vulnerability information, which often becomes available quite a long time after a vulnerability has been exploited. Nonetheless, when there is a sea of vulnerabilities to fix, EPSS proves a very powerful tool for prioritizing them.

Precision

Precision measures the accuracy of a threat intelligence system on a finite set of vulnerabilities: the percentage of correctly identified threats out of all the threats the system identified.

For example, if a threat intelligence system identified 100 threats and 95 of them were real threats, the system’s precision would be 95%.

Recall

Recall measures the completeness of a threat intelligence system: the percentage of the threats actually present that the system identified. For example, if there were 100 threats present and the threat intelligence system identified 95 of them, the system’s recall would be 95%.
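
Both definitions reduce to counting true positives. The helper below is a minimal sketch with hypothetical function names: "flagged" is the set of vulnerabilities a system (for example, EPSS with a chosen score threshold) marked for action, and "exploited" is the set actually exploited. It reproduces the 95% figures from the two examples above.

```python
def flag_by_threshold(scores, threshold):
    """Return the IDs whose score meets a (hypothetical) remediation threshold."""
    return {vuln_id for vuln_id, score in scores.items() if score >= threshold}


def precision_recall_f1(flagged, exploited):
    """Precision, recall and F1 for flagged vs. actually exploited vulnerabilities."""
    flagged, exploited = set(flagged), set(exploited)
    true_positives = len(flagged & exploited)
    precision = true_positives / len(flagged) if flagged else 0.0
    recall = true_positives / len(exploited) if exploited else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# 100 vulnerabilities flagged (IDs 0-99); 100 actually exploited (IDs 5-104); 95 overlap.
p, r, f1 = precision_recall_f1(range(100), range(5, 105))
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")  # precision=0.95 recall=0.95 f1=0.95
```

Raising the threshold passed to `flag_by_threshold` typically trades recall for precision, which is why both numbers are reported together.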

Caveat

For some vulnerabilities, EPSS performs well only after one to two weeks, once the system has stabilised its prediction score, and it might fail to raise the alarm immediately. Most exploited vulnerabilities have most of their published exploits within a month of publication. Here are a few cases to keep in mind if you are considering EPSS for your vulnerability management program:

EPSS scores are constantly changing, especially after initial exploitation

- Log4Shell (CVE-2021-44228) only reached a score above 50% after four days, and reached its final score of 96% only after a full month.

- CVE-2022-22954 started with 66.9% on the date of publication. A week later, EPSS dropped the score to 32.6% with no exploit found in the wild. However, an exploit was published almost a month after publication, and EPSS raised the score to 93%.
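
Because FIRST recalculates EPSS scores daily, the lag described in these examples can be measured by keeping a score history per CVE and finding the first day it crosses a remediation threshold. This is a minimal sketch, assuming the history is already available as a list of `(date, score)` pairs; the function names and the sample values are hypothetical and do not reproduce the real history of either CVE above.

```python
from datetime import date


def first_crossing(history, threshold):
    """Return the first date whose score is >= threshold, or None if it never crosses."""
    for day, score in sorted(history):  # sort by date so input order does not matter
        if score >= threshold:
            return day
    return None


def days_to_threshold(history, threshold):
    """Days from the first recorded score (e.g. publication) to the first crossing."""
    crossed = first_crossing(history, threshold)
    if crossed is None:
        return None
    start = min(day for day, _ in history)
    return (crossed - start).days


# Hypothetical daily history for one CVE (dates and scores are illustrative only).
history = [
    (date(2024, 1, 1), 0.12),
    (date(2024, 1, 3), 0.31),
    (date(2024, 1, 5), 0.57),
    (date(2024, 1, 31), 0.96),
]
print(days_to_threshold(history, 0.5))  # prints 4
```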

In such cases, EPSS can be complemented or replaced by more real-time cyber threat intelligence and alerting from various providers. For situations like these, FIRST’s documentation for EPSS advises:

If there is evidence that a vulnerability is being exploited, then that information should supersede anything EPSS says.
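
A minimal way to apply this advice is to treat any evidence of exploitation (for instance, a CVE listed in CISA KEV or flagged by a threat intelligence feed) as certainty and fall back to the EPSS score otherwise. The function names, the override value of 1.0 and the sample data are assumptions for illustration.

```python
def effective_probability(epss_score, exploitation_observed):
    """EPSS score, unless there is evidence of exploitation, which supersedes it."""
    if not 0.0 <= epss_score <= 1.0:
        raise ValueError("an EPSS score is a probability between 0 and 1")
    return 1.0 if exploitation_observed else epss_score


def prioritise(epss_scores, known_exploited):
    """Order vulnerability IDs by effective probability, highest first."""
    return sorted(
        epss_scores,
        key=lambda vuln_id: effective_probability(epss_scores[vuln_id], vuln_id in known_exploited),
        reverse=True,
    )


# Hypothetical IDs and scores; "CVE-C" has a low EPSS score but was seen exploited.
scores = {"CVE-A": 0.30, "CVE-B": 0.80, "CVE-C": 0.05}
print(prioritise(scores, known_exploited={"CVE-C"}))  # ['CVE-C', 'CVE-B', 'CVE-A']
```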

On the other hand, because the EPSS score, and with it the probability of exploitation, can vary over time, using EPSS directly alongside CVSS can make the resulting risk fluctuate.

We recommend using a weighted value of EPSS against CVSS to compensate for the sharp variation of EPSS scores.
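
One such weighting is sketched below, assuming a simple linear blend in which the CVSS base score (0 to 10) is normalised to 0 to 1 and combined with the EPSS probability. The weight of 0.3, the function name and the CVSS value of 9.8 in the usage lines are assumptions, not values prescribed by FIRST or by this article; the EPSS values are the CVE-2022-22954 scores quoted above.

```python
def blended_risk(epss, cvss_base, epss_weight=0.3):
    """Linear blend of EPSS (0..1) and CVSS base score normalised from 0..10 to 0..1.

    A lower epss_weight damps how much a sudden EPSS swing moves the result.
    """
    if not 0.0 <= epss_weight <= 1.0:
        raise ValueError("epss_weight must be between 0 and 1")
    if not (0.0 <= epss <= 1.0 and 0.0 <= cvss_base <= 10.0):
        raise ValueError("score out of range")
    return epss_weight * epss + (1.0 - epss_weight) * (cvss_base / 10.0)


before = blended_risk(0.669, 9.8)  # EPSS on the day of publication
after = blended_risk(0.326, 9.8)   # EPSS one week later
print(round(before, 3), round(after, 3))  # 0.887 0.784
```

The raw EPSS score falls by 0.343, while the blended score falls by only about 0.103 (0.3 × 0.343). A lower `epss_weight` damps swings further, at the cost of reacting more slowly to genuine changes in exploitation likelihood.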

Modern Cyber Risk Quantification

Modern cyber risk quantification has evolved to rely on many weighted, time-bound factors to accurately assess the probability of exploitation, the dangerousness of an exploit, and the impact it has on an application given its deployment context. The probability of exploitation is influenced by the location of the asset where the vulnerability is present, the likelihood of exploitation, the dangerousness of the exploit, the availability of the exploit, and its presence in popular repositories. The base potential dangerousness of an exploit is generally conveyed through CVSS/CWSS scores. The overall impact of a vulnerability takes into account the number of users affected, the organization’s resilience to an application being compromised, the volume and nature of data at risk (e.g., sensitive or critical), and the level of privacy associated with the data within the application. By considering these multifaceted factors, modern cyber risk quantification enables organizations to make informed decisions about their security posture and prioritize remediation efforts effectively.

Integrating contextual cyber risk quantification into a modern vulnerability management program

Integrating contextual cyber risk quantification into vulnerability management is essential for organizations to prioritize remediation efforts effectively and allocate resources optimally. By considering the unique context of each vulnerability, including factors such as the criticality of the affected asset, the potential impact on the business, the likelihood of exploitation, and the organization’s overall risk tolerance, a more accurate and nuanced assessment of risk can be achieved. This context-aware approach to vulnerability management enables organizations to address the most pressing vulnerabilities first, ensuring that limited resources are utilized efficiently while providing the best possible protection against cyber threats. Ultimately, contextual cyber risk quantification enhances the decision-making process in vulnerability management, empowering organizations to make informed choices and improve their cybersecurity posture proactively.

A modern cyber risk score would rely on the following factors:

- Probability of exploitation – how likely is an exploit to manifest? This information can be derived from a mix of:

  - Cyber threat intelligence, such as EPSS and real-time vulnerability threat intelligence

  - Location – where an asset is located in the network

  - Context – how the system is connected and deployed, and what other vulnerabilities are present in the environment where the asset is deployed

- Dangerousness of an exploit – this is usually well described by the CVSS or CWSS base vulnerability information

- Public availability of an exploit for the vulnerability

- Impact analysis – the vulnerability’s impact on the application or environment where it manifests, for example, how much downtime exploiting it could cause
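
One way to turn the factors above into a single number is sketched below. It is a hypothetical model, not Phoenix’s formula: the field names, the multiplicative form (probability × dangerousness × impact) and every multiplier for location, context and exploit availability are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    """Hypothetical inputs for one vulnerability on one asset."""
    epss: float                 # threat intelligence: probability of exploitation (0..1)
    internet_facing: bool       # location: reachable from outside the network?
    vulnerable_neighbours: int  # context: other vulnerable assets in the same environment
    public_exploit: bool        # public availability of an exploit
    cvss_base: float            # dangerousness: CVSS base score (0..10)
    impact: float               # impact analysis, normalised to 0..1


def risk_score(f: Finding) -> float:
    """Return a 0..100 score as probability x dangerousness x impact (assumed form)."""
    location = 1.0 if f.internet_facing else 0.5                   # assumed internal discount
    context = 1.0 + 0.1 * min(f.vulnerable_neighbours, 5)          # assumed, capped uplift
    exploit = 1.5 if f.public_exploit else 1.0                     # assumed uplift
    probability = min(1.0, f.epss * location * context * exploit)  # keep it a probability
    dangerousness = f.cvss_base / 10.0
    return 100.0 * probability * dangerousness * f.impact


external = Finding(0.4, True, 0, False, 9.8, 1.0)
internal = Finding(0.4, False, 0, False, 9.8, 1.0)
print(round(risk_score(external), 1), round(risk_score(internal), 1))  # 39.2 19.6
```

A multiplicative form means a near-zero factor (for example, negligible impact) pulls the whole score down, which is one reason to cap and bound each input.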

An example of those factors:

- Probability of exploitation, which can be derived from:

  - The location of the asset (internal/external) where the vulnerability manifests

  - The likelihood of exploitation:

    - EPSS score

    - Cyber threat intelligence such as VulnDB, CISA KEV and local advisories

- Dangerousness of the exploit:

  - Is the exploit a Remote Code Execution (RCE)?

  - Is there an exploit available for the vulnerability?

  - Is the exploit module automated or readily available in Exploit-DB, GitHub, Nuclei or Metasploit?

  - The base danger of the exploit, generally communicated by the CVSS/CWSS score

- The overall impact of the vulnerability:

  - How many users it impacts

  - How well the organization can survive with the application compromised

  - How much data there is to compromise

  - How private the data in the application is (sensitive, critical, etc.)
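
The checklist above maps naturally onto sub-scores. The sketch below shows hypothetical dangerousness and impact functions; the 0.1 bump per "yes" answer, the sensitivity weights and the 1.5× uplift for business-critical applications are assumptions for illustration only.

```python
# Hypothetical weights for "how private is the data in the application".
SENSITIVITY = {"public": 0.25, "internal": 0.5, "sensitive": 0.75, "critical": 1.0}


def dangerousness(cvss_base, is_rce, exploit_available, exploit_automated):
    """0..1: CVSS base danger plus an assumed 0.1 for each 'yes' in the checklist."""
    score = cvss_base / 10.0 + 0.1 * sum([is_rce, exploit_available, exploit_automated])
    return min(score, 1.0)


def impact(share_of_users, share_of_data, data_class, business_critical):
    """0..1: average of user reach, data volume and data sensitivity.

    share_of_users / share_of_data: fraction (0..1) of users and records exposed.
    business_critical: True if the organisation cannot operate with the app compromised.
    """
    for share in (share_of_users, share_of_data):
        if not 0.0 <= share <= 1.0:
            raise ValueError("shares must be between 0 and 1")
    base = (share_of_users + share_of_data + SENSITIVITY[data_class]) / 3
    return min(1.0, base * 1.5) if business_critical else base  # assumed 1.5x uplift


print(round(dangerousness(7.5, is_rce=True, exploit_available=True, exploit_automated=False), 2))  # 0.95
print(round(impact(0.5, 0.5, "critical", business_critical=False), 3))  # 0.667
```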

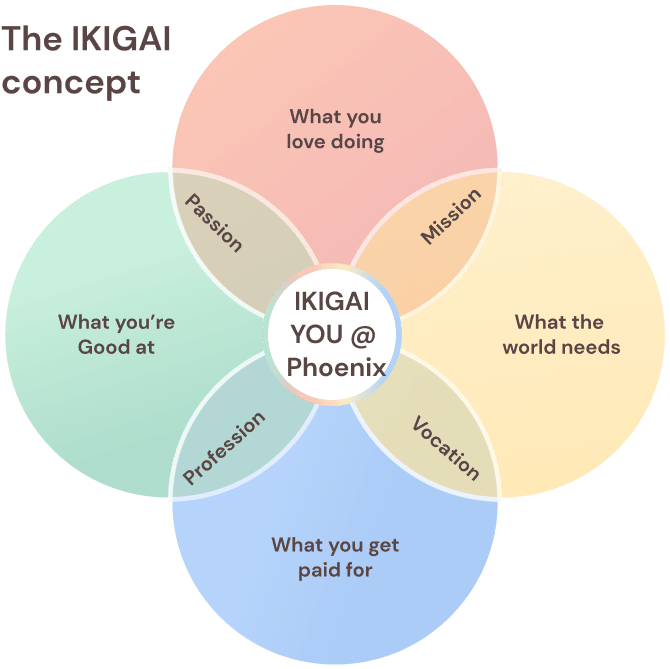

Phoenix Cyber Risk Scoring

Phoenix combines EPSS, used to predict the exploitation of vulnerabilities, with the location of assets, the impact a vulnerability can cause, and any compensating controls.

Each variable is introduced with controlled weights and time windows to dampen sharp increases or decreases in the risk score. A sharp change occurs only when all the variables change sharply at once, an event with a rarity of 0.09%.
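
The exact weights and windows Phoenix uses are not described here. As a generic illustration of how a time window dampens sharp swings, the sketch below applies a trailing moving average to a series of daily scores; the 7-day window and the function name are assumptions, and this is not Phoenix’s implementation.

```python
from collections import deque


def trailing_average(daily_scores, window=7):
    """Smooth a daily score series with a trailing moving average over `window` days."""
    if window < 1:
        raise ValueError("window must be at least 1")
    recent = deque(maxlen=window)  # holds only the last `window` scores
    smoothed = []
    for score in daily_scores:
        recent.append(score)
        smoothed.append(sum(recent) / len(recent))
    return smoothed


# A hypothetical overnight jump from 0.1 to 0.9 moves the smoothed score far less.
series = [0.1] * 7 + [0.9]
print(round(trailing_average(series)[-1], 3))  # 0.214
```

A longer window damps swings more but also delays the response to a genuine change in exploitation likelihood.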

If using EPSS in your system, we recommend using a weighted value of EPSS against CVSS to compensate for the sharp variation of EPSS scores.

The Phoenix Security Platform integrates EPSS scoring into its risk quantification system.

If you want to rely on real-time information, the Phoenix Security Platform can connect to more real-time sources, such as vulnerability databases like VulnDB, Recorded Future and other integrations.

Conclusion

The EPSS Model is invaluable for organizations that prioritise patching efforts based on the likelihood of vulnerability exploitation. Although it has some drawbacks, its open approach and reliance on vulnerability data make it a reliable option for many organizations. By implementing the EPSS Model, organizations can enhance their cybersecurity measures and protect their digital assets more effectively.

Phoenix Perspective

Relying only on CVSS and severity is a recipe for burnout. Phoenix Security is here to help with automated prioritization and contextualization. Phoenix uses a combination of scoring, prioritization, selection and alerting that enables the team to focus on the 10% of vulnerabilities that matter in the context of the organization.

By leveraging EPSS and other cyber threat intelligence with no configuration required, teams can focus on the 10% of vulnerabilities that really matter.

Phoenix Security also integrates with cyber threat intelligence providers such as CISA, alerting when a vulnerability appears in the Known Exploited Vulnerabilities catalog (CISA KEV).

In conclusion, leveraging EPSS together with other factors enables the cybersecurity and development teams to focus on cyber risk quantification and on what matters most, which prevents burnout.