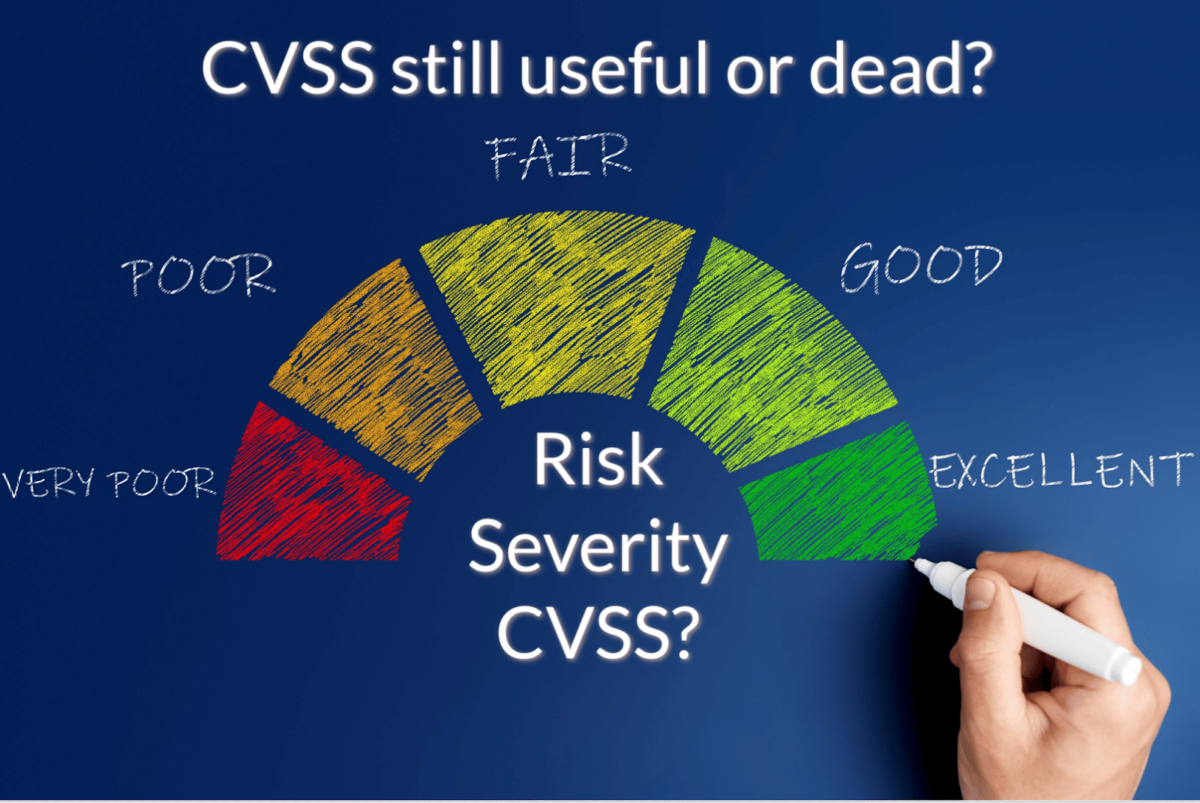

Context is king, and we need a new vulnerability-scoring system

CVSS no longer fits: risk and context are king in vulnerability management



To CVSS or not to CVSS, that is the question.

The security community is having a broad and healthy debate about what scoring should measure, what to fix, and when.

CVSS, together with CVE and CWE identifiers, has historically served a clear purpose: offering a severity-based approach to scoring vulnerabilities and deciding which to fix first.

This was a good initial approach, but with the number of vulnerabilities multiplying and only a small fraction of them being exploitable, CVSS alone has reached its limits.

Should we throw CVSS away entirely? Not really. It still serves a purpose, but it is only the beginning of a journey towards higher maturity, and a risk score is that higher maturity level.

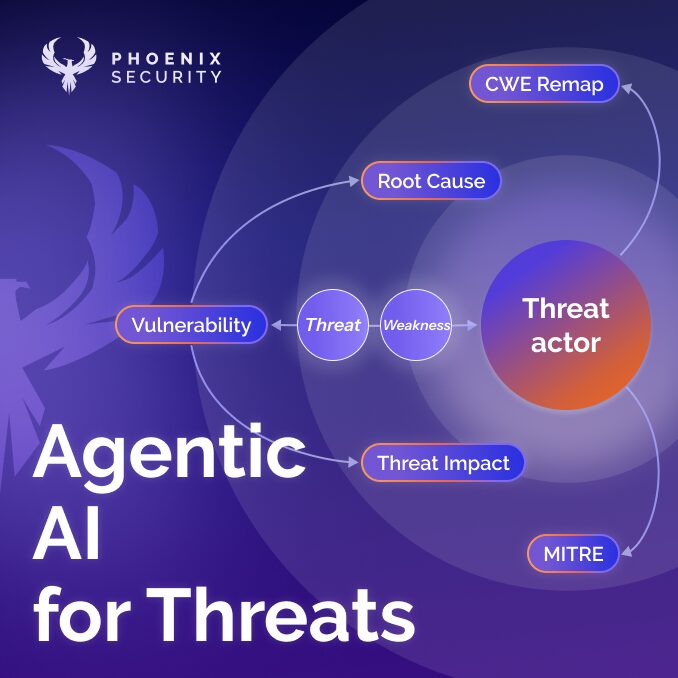

The next generation of risk

Risk formulas need to take several factors into consideration:

- The probability of an event happening

- Impact on the organization

- How dangerous the vulnerability is (which can be derived from its severity)

The probability of an event happening is the more complex factor, because it is driven by:

- Location in the network, also known as context or locality, of a vulnerability and the assets it affects

- Number of times it gets exploited in the wild

- Whether it is easily exploitable (exploit complexity)

- Whether an exploit is easy to obtain

- Other cyber threat intelligence (CTI) factors

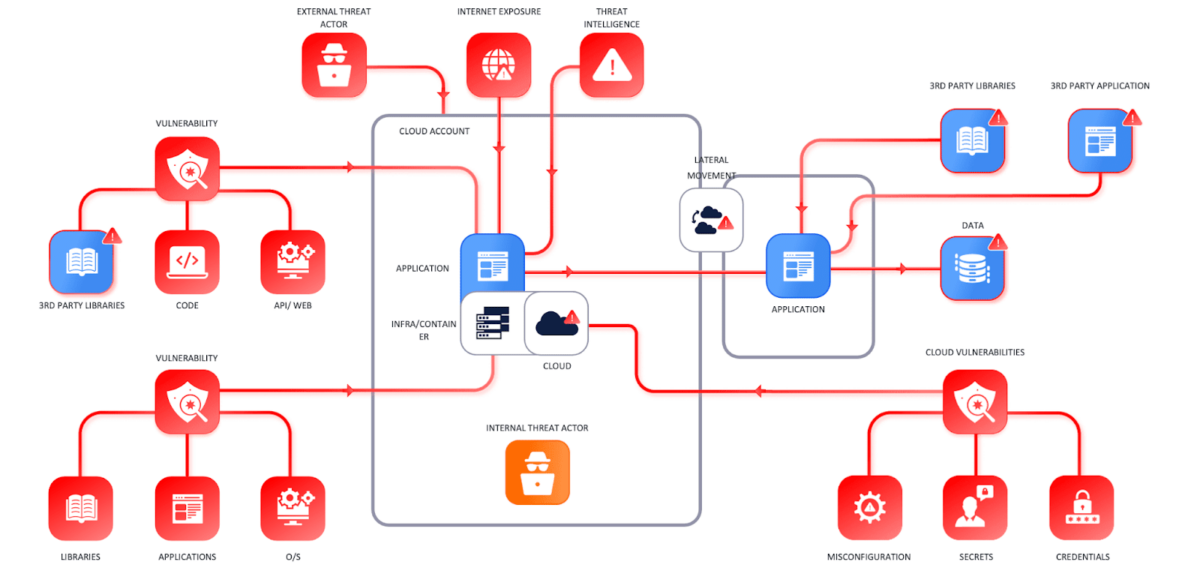

Context is king

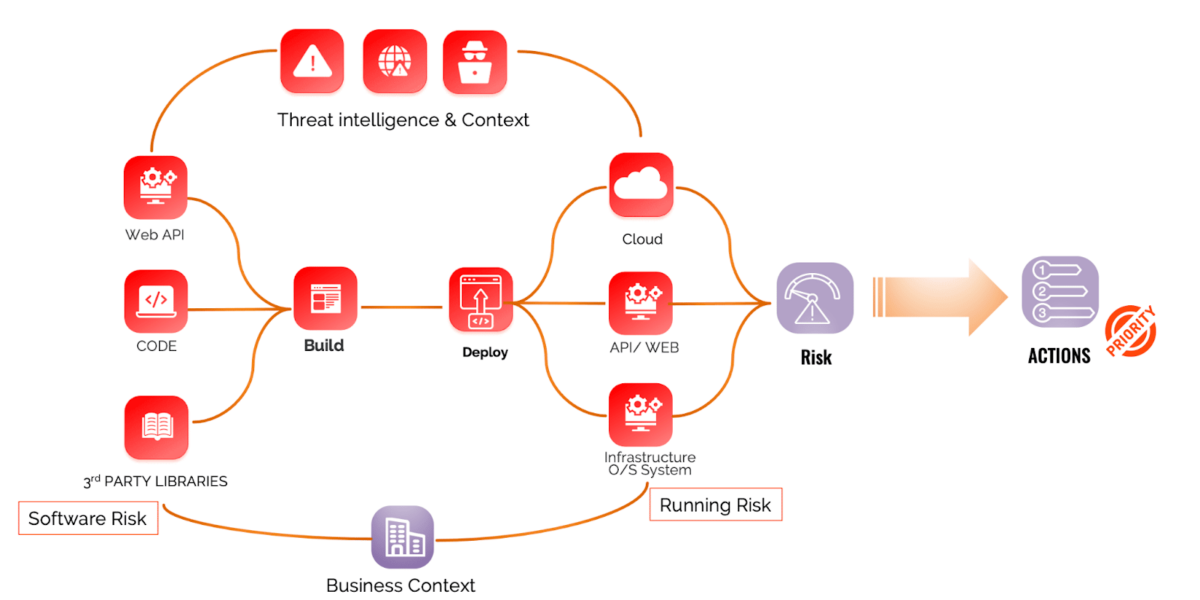

In cloud and application security, context is usually an invisible problem until we look at who ends up receiving the tickets.

What should be fixed first? A misconfiguration, or a vulnerability in code, a library, or the operating system?

How many vulnerabilities are there every day?

The way forward is to move from the chaos of cloud and application security, where vulnerabilities pop up all the time, security teams scream, and threat actors target vulnerabilities, to a more streamlined approach.

Context remains one of the biggest drivers of whether a vulnerability is exploitable. At the same time, more regulation is emerging that drives remediation based on known exploitation, namely CISA’s Known Exploited Vulnerabilities (KEV) catalog.

This is a good first attempt, but it could lead to blanket resolution instead of smart resolution.

What is a smart resolution?

To be smart, a resolution needs to identify:

- Where does the asset live in the network (externally facing, internal, or in a DMZ), and does it have compensating controls?

- How much damage (direct and indirect) could the asset exploitation cause to the organization, or more simply, how critical is the asset

- What is the blast radius, or what other assets are interconnected to this asset?

- How easy is the vulnerability to exploit? Is an exploit available, and is the vulnerable component easy to reach? Can an exploit be triggered with ease?

- Is a misconfiguration easily exploitable in your cloud?

- How frequently is an exploit being used in the wild? Is it being discussed on Twitter, LinkedIn, and other social media?
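One way to make these questions concrete is to record the answers alongside each finding. The sketch below is a minimal, hypothetical Python data structure: the `VulnContext` and `Exposure` names, the field set, and the thresholds (criticality of 7 or more, more than five connected assets) are illustrative assumptions, not part of any specific tool.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Exposure(Enum):
    """Where the asset lives in the network (hypothetical categories)."""
    EXTERNAL = "external"
    DMZ = "dmz"
    INTERNAL = "internal"


@dataclass
class VulnContext:
    """Hypothetical record of the questions a smart resolution should answer."""
    cve_id: str
    exposure: Exposure
    compensating_controls: bool
    asset_criticality: int   # 0-10: how much damage exploitation could cause
    connected_assets: int    # blast radius: assets interconnected with this one
    exploit_available: bool
    easy_to_trigger: bool
    exploited_in_wild: bool
    social_chatter: bool     # discussed on Twitter, LinkedIn, etc.

    def risk_flags(self) -> list[str]:
        """Return the context signals that argue for fixing this finding sooner.

        The thresholds (criticality >= 7, more than 5 connected assets) are
        illustrative assumptions.
        """
        flags = []
        if self.exposure is Exposure.EXTERNAL and not self.compensating_controls:
            flags.append("externally facing, no compensating controls")
        if self.asset_criticality >= 7:
            flags.append("critical asset")
        if self.connected_assets > 5:
            flags.append("large blast radius")
        if self.exploit_available and self.easy_to_trigger:
            flags.append("available, easy-to-trigger exploit")
        if self.exploited_in_wild:
            flags.append("exploited in the wild")
        if self.social_chatter:
            flags.append("social media chatter")
        return flags


# Same CVE, two very different contexts
public_api = VulnContext("CVE-2021-44228", Exposure.EXTERNAL, False, 9, 12,
                         True, True, True, True)
internal_tool = VulnContext("CVE-2021-44228", Exposure.INTERNAL, True, 2, 1,
                            True, False, True, True)
print(len(public_api.risk_flags()), len(internal_tool.risk_flags()))  # 6 2
```

The same vulnerability raises six flags on the public, critical asset and only two on the segmented internal tool, which is the difference between blanket and smart resolution.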

Putting It All Together: Context Is King, but Not the Only Factor That Matters

Enriching asset and vulnerability context increases visibility. It gives IT security decision-makers the information they need to prioritize application security or cloud security activities while maintaining strong assurances. Without risk context, any approach is a sledgehammer: an arbitrary, ad-hoc security strategy is not a strategy, and this type of approach increases risk. On the other hand, data-driven decision-making can provide actionable, quantified risk scores that allow a company to take a more surgical approach: calculating contextual risk, prioritizing activities, applying resources strategically based on business impact and data-driven probabilities, and reducing the anxiety and overwhelming feeling of unmanageable issues, while still providing a high degree of security assurance.

Although the number of CVEs published each year has been climbing, placing a burden on analysts to determine the severity and recalculate risk to IT infrastructure, enriching a CVE’s context offers an opportunity to increase IT security team efficiency. For example, in 2021, there were 28,695 vulnerabilities disclosed, but only roughly 4,100 were exploitable, meaning that only 10-15% presented an immediate potential risk. Having this degree of insight offers clear leverage, but how can this degree of insight be gained?

Taking August alone, 2,311 vulnerabilities across application and cloud security were published: 37% were exploitable, 21% showed public exploitation, and roughly 5% to 10% were important to look at, depending on active exploitation at a specific time (EPSS and other honeynet data) or on location and business-criticality values.
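The percentages above are easy to sanity-check. This short Python sketch recomputes the 2021 exploitable share from the quoted figures and turns the August percentages into approximate counts; the helper names are hypothetical, and the 5% and 10% values simply bracket the range quoted in the text.

```python
def share(part: int, total: int) -> float:
    """Percentage of `total` that `part` represents."""
    return 100 * part / total


def approx_count(total: int, pct: float) -> int:
    """Approximate number of items that a fraction `pct` of `total` corresponds to."""
    return round(total * pct)


# 2021 figures quoted above: 28,695 disclosed, roughly 4,100 exploitable
print(f"2021 exploitable share: {share(4_100, 28_695):.1f}%")  # 14.3%

# August figures quoted above: 2,311 vulnerabilities published
for label, pct in [("exploitable", 0.37), ("public exploitation", 0.21),
                   ("worth a look, low end", 0.05), ("worth a look, high end", 0.10)]:
    print(f"{label}: ~{approx_count(2_311, pct)}")
```

In absolute terms, that is a few hundred findings a month that deserve attention out of more than two thousand, which is where prioritization pays off.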

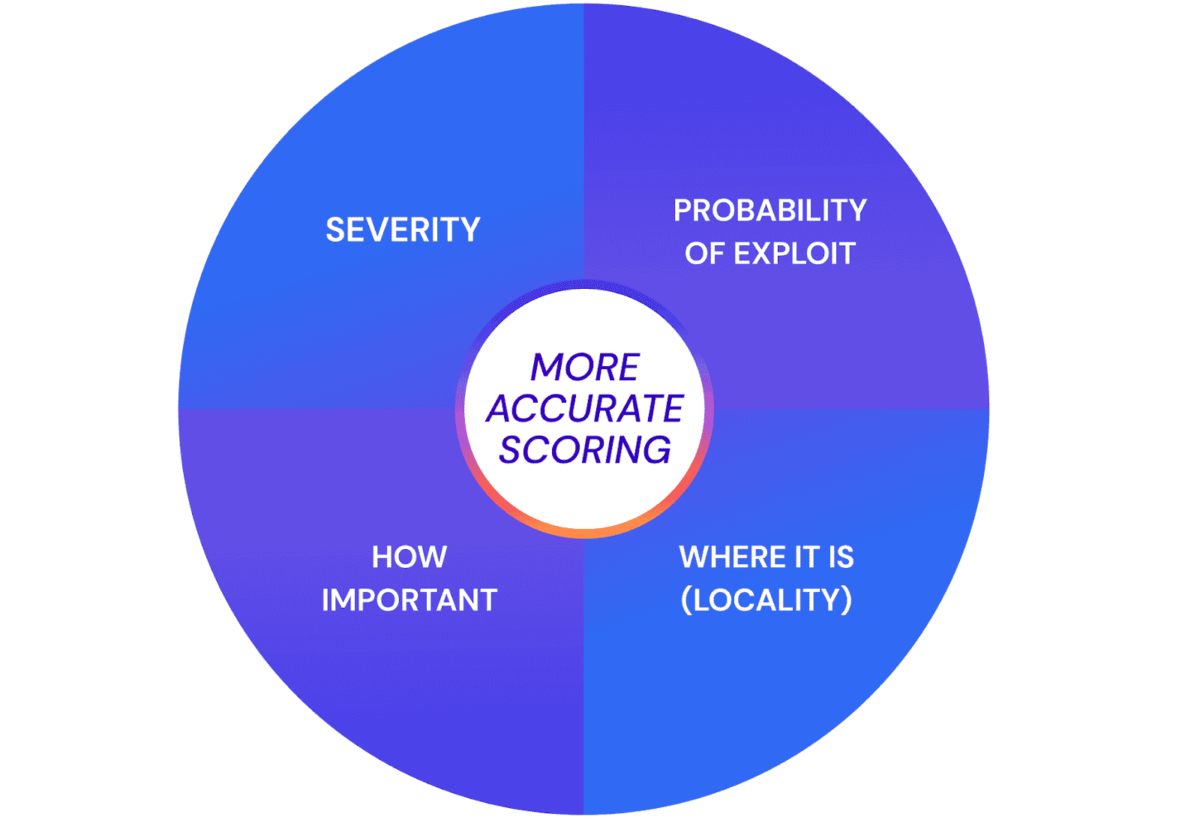

Context is king, prioritization is queen, and importance and severity are the kingdom’s knights.

Think of your organization as your kingdom, and consider who defends it.

CVSS severity, from now on referred to as severity, is the most basic piece in vulnerability prioritization, hence the first knight in your army.

Business importance, referred to as impact, is a more advanced piece in vulnerability prioritization, hence the second knight in your army.

Prioritization by probability of exploitation is the queen: a lot of data needs to be consolidated and leveraged, but it is a strong, powerful second line of defence for knowing what to fix first.

Context and locality remain the major driver, the king of the army: they give direction and tell you whom to follow up with and raise the issue with. Context is the key strategic piece in your defence.

Let’s dive a bit deeper into each one of them.

When we revisit the most basic method of calculating risk, we see that it combines impact and probability. Although this makes sense, it is only a high-level perspective and of limited use in practical situations. The business impact, operational criticality, inherent value, and technical locality or context of all IT systems and information need to be combined with cyber threat intelligence (CTI) data that describes the current threat environment. More detailed, better-quality inputs produce a more accurate contextual risk score for a particular asset or CVE. These inputs are discussed below.

The King – The location or CONTEXT of the asset

In cloud and application security, locality or context measures an asset’s technical specification and its placement in the network topology, on-premises or in the cloud. A system is more likely to be exposed to attack if it is WAN-facing (wide area network) or otherwise externally facing than if it is internal.

Things get a bit more complicated with compensating controls, interconnected systems, and other lateral-movement paths, but for the purposes of this article, we’ll skip these.

The probability of exploitation is directly related to whether a system, API, or web application is externally facing.

Another factor, tied to locality and directly related to the probability of exploitation, is the type of vulnerability.

Usually, vulnerabilities that can be exploited by calling a URL or any other web service (the remote code execution category) are much more likely to be exploited.
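Locality can be folded into a score as a reachability factor. The following is a hypothetical sketch: the zone names, the weights (1.0 external, 0.7 DMZ, 0.4 internal), the 1.25 boost for remotely triggerable flaws such as RCE reachable through a URL, and the halving for compensating controls are all illustrative assumptions rather than calibrated values.

```python
# Hypothetical exposure weights per network zone (illustrative, not calibrated)
EXPOSURE_WEIGHT = {"external": 1.0, "dmz": 0.7, "internal": 0.4}


def locality_factor(zone: str, remotely_triggerable: bool,
                    compensating_controls: bool = False) -> float:
    """0-1 reachability factor for a vulnerability, based on where its asset lives."""
    factor = EXPOSURE_WEIGHT[zone]
    if remotely_triggerable:       # e.g. RCE reachable by calling a URL
        factor = min(1.0, factor * 1.25)
    if compensating_controls:      # e.g. a WAF or network segmentation
        factor *= 0.5
    return factor


for zone in EXPOSURE_WEIGHT:
    print(zone, locality_factor(zone, remotely_triggerable=True))
```

A multiplicative factor keeps the idea simple: the same flaw is scaled down as it moves further from the internet or behind controls, and it never exceeds its fully exposed value.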

Of course, this does not apply to all vulnerabilities; we have recently seen open-source libraries used at large in exploitation campaigns.

Nonetheless, recent examples such as Log4j, Spring4Shell, and ProxyNotShell (the recent Microsoft Exchange vulnerability) are all remote code execution vulnerabilities.

For more details, we wrote another post on the subject

The Queen – The probability of exploitation

Exploitability measures how much opportunity a vulnerability offers an attacker. Probability depends on the exploitability of a particular vulnerability and is directly impacted by several main factors, including, but not limited to, the level of skill required to conduct an attack (known as the complexity of an attack), the availability of PoC code or fully mature malicious exploit code for a particular vulnerability, and whether the vendor of the vulnerable software has issued a security update to fix the security gap. Exploitability can also be enriched with CTI from social media and dark-web reconnaissance. All of these factors impact the greater context of risk.

If fully mature malicious exploit code is available to the general public and can be executed by a low-skilled attacker, the probability of exploitation in the wild increases significantly. On the other hand, if the vendor has released a security patch and remediation can be done quickly, the priority of the vulnerability can be elevated, because a simple procedure offers a high degree of protection.
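A minimal sketch of these two effects, assuming a three-level exploit-maturity scale and made-up numbers: a mature public exploit that needs little skill raises the likelihood of exploitation, while an available, quick-to-apply patch raises the priority, following the reasoning above. The names `ExploitMaturity`, `exploit_likelihood`, and `priority_multiplier`, and all values, are hypothetical.

```python
from enum import IntEnum


class ExploitMaturity(IntEnum):
    """Hypothetical ordinal scale for how far public exploit code has matured."""
    NONE = 0
    POC = 1
    MATURE = 2  # fully mature, weaponised exploit code


# Illustrative base likelihoods per maturity level (not calibrated values)
BASE_LIKELIHOOD = {
    ExploitMaturity.NONE: 0.1,
    ExploitMaturity.POC: 0.4,
    ExploitMaturity.MATURE: 0.7,
}


def exploit_likelihood(maturity: ExploitMaturity, low_skill: bool) -> float:
    """0-1 likelihood of exploitation in the wild; low attack complexity adds 0.3."""
    return min(1.0, BASE_LIKELIHOOD[maturity] + (0.3 if low_skill else 0.0))


def priority_multiplier(patch_available: bool, quick_fix: bool) -> float:
    """A cheap, available fix raises priority, since it buys a lot of protection."""
    return 1.2 if patch_available and quick_fix else 1.0


print(exploit_likelihood(ExploitMaturity.NONE, low_skill=False))  # 0.1
print(exploit_likelihood(ExploitMaturity.MATURE, low_skill=True))
print(priority_multiplier(patch_available=True, quick_fix=True))  # 1.2
```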

The Knight – How important the asset is

Importance measures the potential costs to an organization should an asset be breached, and how valuable an attacker may consider the asset. Value is directly correlated to the level of risk assurance required for an asset to be considered secure and, therefore, to its priority in security planning.

Systems and information may be critical to an organization if they are directly related to revenue-generating activities or host sensitive data protected by national or regional regulations such as GDPR or HIPAA, or by an industry standard such as PCI-DSS. Assets may also be considered especially important if they are costly to replace or to restore to an operational state.

The Knight – The severity of a vulnerability

Severity measures how much damage can be done to a target system. In terms of prioritization, a vulnerability that allows remote code execution (RCE) without user interaction at admin-level system privileges is very high severity. However, the vulnerability is contextually less severe if the system does not hold highly sensitive data and sits on a segmented network without a public attack surface. If, on the other hand, the vulnerability affects a public-facing system that contains private customer data, it should be prioritized with a higher score.

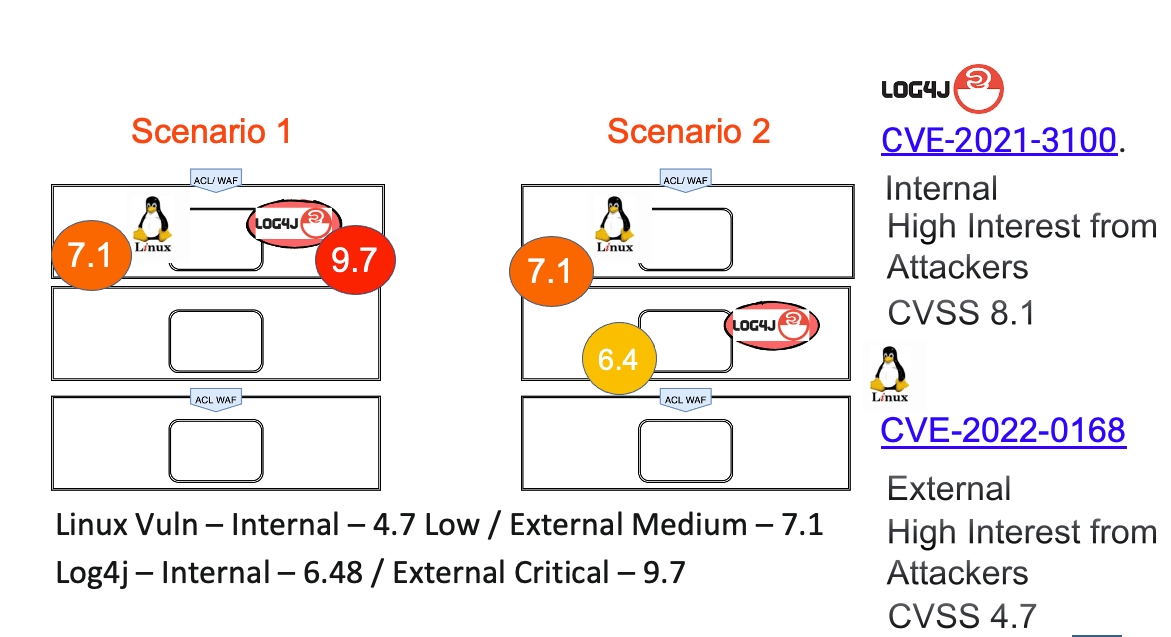

Scenario 1 – Context and King – Checkmate – How Risk Depends On Context For Two Vulnerabilities

The image above shows the locality topology for a network that contains both CVE-2021-3100 and CVE-2022-0168.

For this case, we will compare CVE-2021-3100 (known as Log4J) and CVE-2022-0168 to show how the locality of a vulnerability impacts its relative risk: the same two vulnerabilities result in different final prioritization scores depending on whether they sit on an internal or an externally facing system.

Log4J is a remote code execution (RCE) vulnerability that affects Apache Log4j2 versions from 2.0-beta7 to 2.17.0 on any OS (Windows, Linux, macOS) and can allow a remote attacker to execute arbitrary code on the target server. Exploiting Log4J requires a vulnerable version to be installed and used to log unsanitized input that comes from an external source, such as a username, HTTP headers, or other incoming data. Log4J has a CVSS severity score of 8.1. CVE-2022-0168 is a denial of service (DOS) vulnerability that affects Linux-based systems. It allows an attacker to disable an affected system, resulting in downtime, and has a CVSS score of 4.7.

Considering the locality context of the vulnerabilities as they exist on the network (shown in Figure 6), we see that in Diagram 6 Scenario 1 on the left, the Log4J CVSS severity score is increased to a relative contextual risk score of 9.7, and the Linux DOS vulnerability is increased to 6.1. Both vulnerabilities have a higher overall risk score because they are publicly exposed.

Diagram 6 Scenario 2 on the right shows a modified network environment where the Log4J vulnerability is no longer on a public-facing system. The Log4J vulnerability is only used for an enterprise’s internal application. The locality context reduces the relative severity of Log4J because it does not accept data from a public source that could be crafted to exploit the vulnerability. Because the Linux DOS vulnerability is still on a public-facing system, the two vulnerabilities are not of comparable priority.

The Knight – Leveraging Business Criticality to Prioritize: An Example

For this case, we will again evaluate CVE-2021-3100 (known as Log4J) and compare the contextual risk scores of two independent systems with different levels of business criticality. In Figure 7 below, you can see that the asset represented on the left has lower importance to the business than the asset on the right, which contains personally identifiable information (PII); both are impacted by Log4J.

The input factors for calculating how important an asset is include:

- Incoming revenue that depends on the asset

- Potential long-term impact on the brand (reputational damage) if the asset were compromised

- Number of users affected by a downtime (brand and customer impact)

- Potential regulatory fines, contractual fines, or other compliance consequences as a result of downtime or a data breach
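The four inputs above can be combined into a single importance value. This is a sketch under stated assumptions: each input is scaled to 0-10 against a hypothetical cap (1M revenue, 10,000 users, 500K in fines), brand damage is taken as an analyst rating on 0-10, and the four parts are averaged with equal weight. Real business impact analysis would set these caps and weights per organization; the class and field names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class AssetImportance:
    """Importance inputs from the list above (all caps below are hypothetical)."""
    revenue: float          # annual revenue that depends on the asset
    brand_damage: float     # analyst rating of long-term reputational damage, 0-10
    users_affected: int     # users hit by downtime
    potential_fines: float  # regulatory / contractual fines (GDPR, HIPAA, PCI-DSS...)

    def score(self, revenue_cap: float = 1_000_000, users_cap: int = 10_000,
              fines_cap: float = 500_000) -> float:
        """Scale each input to 0-10 against its cap and average them equally."""
        parts = [
            10 * min(self.revenue / revenue_cap, 1.0),
            min(max(self.brand_damage, 0.0), 10.0),
            10 * min(self.users_affected / users_cap, 1.0),
            10 * min(self.potential_fines / fines_cap, 1.0),
        ]
        return sum(parts) / len(parts)


pii_system = AssetImportance(960_000, 8, 50_000, 2_000_000)
cafeteria = AssetImportance(30_000, 0, 200, 0)
print(round(pii_system.score(), 2), round(cafeteria.score(), 2))
```

The resulting 0-10 value is one way to produce the impact score IM used in the risk formula later in this article.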

Since the systems have different degrees of business criticality, evaluating Log4J’s ultimate risk score across the two systems results in a different weighted adjustment of the base CVSS severity score of 8.1. In Diagram 7 (Scenario 1) on the left, the Log4J CVSS severity score is decreased to a relative contextual risk score of 6.48 and then further reduced to 5.1 because the system is not critical. However, on the system that contains PII, the CVSS severity score is increased to 9.7 (critical).
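Expressed as multipliers on the base score of 8.1, the adjustments quoted above work out as follows. This sketch only derives the ratios implied by the numbers in the example; it does not reproduce how those contextual scores were calculated, and `implied_multiplier` is a hypothetical helper.

```python
def implied_multiplier(base: float, contextual: float) -> float:
    """Ratio between a contextual risk score and the CVSS base score it started from."""
    return contextual / base


BASE_CVSS = 8.1  # Log4J base score used in the example above
for label, contextual in [("left asset, first adjustment", 6.48),
                          ("left asset, after criticality", 5.1),
                          ("PII asset", 9.7)]:
    print(f"{label}: x{implied_multiplier(BASE_CVSS, contextual):.2f}")
# left asset, first adjustment: x0.80
# left asset, after criticality: x0.63
# PII asset: x1.20
```

Seen this way, the same vulnerability ends up nearly twice as urgent on the PII system as on the non-critical one (9.7 versus 5.1).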


For more details on this subject: https://staging-appsecphoenixcom.kinsta.cloud/whitepapers-resources/whitepaper-vulnerability-management-in-application-cloud-security/

The criticality element and a simple quantification example with impact

We have two assets with the same vulnerability; let’s assume Log4j.

System A – an internet-facing system that generates 80% of the annual transactions for an organization producing a 1.2M annual return.

System B – an internal-facing asset, not connected to System A, that serves internal users information about the cafeteria’s menu. This system generates no revenue, but for the purpose of this exercise, let’s assume it is worth 30K a year in time saved from people asking what’s on the menu.

Let’s assume that exploiting the vulnerability takes a system down for 2h. System A generates an hourly revenue of (1.2M * 0.8) / 8,760 ≈ 109.59.

System B generates an hourly value of 30K / 8,760 ≈ 3.42.

So 2h of downtime causes a primary impact of about 219.18 on System A, against about 6.85 on System B. There is also a secondary impact that is much harder to quantify (brand damage, fines, etc.).
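Recomputing both systems from the inputs stated above makes the gap explicit. A minimal Python sketch, counting only the primary (direct revenue) impact and assuming 8,760 hours in a year, as the example does; the function names are hypothetical.

```python
HOURS_PER_YEAR = 8_760  # 365 * 24, as in the example


def hourly_revenue(annual_revenue: float, share: float = 1.0) -> float:
    """Revenue (or value) per hour attributable to one system."""
    return annual_revenue * share / HOURS_PER_YEAR


def downtime_impact(annual_revenue: float, share: float, hours: float) -> float:
    """Primary (direct) impact only; brand damage, fines, etc. are left out."""
    return hourly_revenue(annual_revenue, share) * hours


system_a = downtime_impact(1_200_000, 0.80, hours=2)  # 80% of a 1.2M annual return
system_b = downtime_impact(30_000, 1.0, hours=2)      # assumed 30K/year value
print(f"A: {system_a:.2f}  B: {system_b:.2f}  ratio: {system_a / system_b:.0f}x")
# A: 219.18  B: 6.85  ratio: 32x
```

The same two hours of downtime cost System A about 32 times more than System B, a difference CVSS cannot express.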

For the purpose of this analysis, we’ll leave out the secondary impact, but FAIR (Factor Analysis of Information Risk) offers a good way to analyse it.

CVSS, however, treats the two systems as equal: Log4j holds a CVSS score of 10 on both and has kept it regardless of criticality or any other factor (https://nvd.nist.gov/vuln/detail/CVE-2021-44228).

New risk formula for the future

Beyond context, a risk formula must account for the probability of exploitation, impact, and severity.

What follows is speculation on a risk formula, along with some insight into the thinking behind prioritization; it is meant to stimulate a conversation around CVSS and the limitations it currently suffers from.

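Risk for a vulnerability can be written as:

$$\text{Risk} = W_S \cdot \text{CVSS}_{\text{base}} \cdot W_P \cdot \text{PE} \cdot W_I \cdot \text{IM}$$

where $W_S$, $W_P$, and $W_I$ are modular weights that give more or less importance to severity, probability, or impact.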

CVSS base is the base severity of the vulnerability

IM is the impact score, a number from 0 to 10 on the impact factor.

This impact factor can be refined further with a more detailed business impact analysis.
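As a sketch, the speculative risk formula translates directly into a function. The default weights of 1.0 are an assumption, and because the terms are multiplied, the raw result is not on CVSS’s 0-10 scale; the usage below simply compares two assets that share the same vulnerability and probability but differ in impact. The function and parameter names, and the PE value of 9.0 on a 0-10 scale, are hypothetical.

```python
def vulnerability_risk(cvss_base: float, pe: float, im: float,
                       ws: float = 1.0, wp: float = 1.0, wi: float = 1.0) -> float:
    """Risk = WS * CVSS(base) * WP * PE * WI * IM.

    cvss_base -- CVSS base score, 0-10
    pe        -- probability of exploitation (output of the PE formula)
    im        -- business impact score, 0-10
    ws/wp/wi  -- modular weights; the 1.0 defaults are an assumption
    """
    return ws * cvss_base * wp * pe * wi * im


# Same Log4j finding (CVSS 10), same hypothetical PE, different business impact
critical_asset = vulnerability_risk(10.0, pe=9.0, im=9.0)  # e.g. System A
cafeteria_menu = vulnerability_risk(10.0, pe=9.0, im=1.0)  # e.g. System B
print(critical_asset, cafeteria_menu)  # 810.0 90.0
```

If scores need to sit alongside CVSS, one option is to divide by the maximum possible product for the chosen weights and rescale to 0-10.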

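- PE is the probability of exploitation, calculated as:

$$\text{PE} = W_C \cdot \text{CTI} + W_Z \cdot \text{Chatter} + W_Z \cdot \text{Zeroday} + W_C \cdot \text{EPSS} + W_{EX} \cdot \text{EX}$$

where $W_C$ weights the CTI and EPSS terms, $W_Z$ weights the Chatter and Zeroday terms, and $W_{EX}$ weights the exploitability term EX.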

- CTI is a value from 0-10 on how much the vulnerability is receiving attention

- Chatter is a statistical number from 0-10 on how much the vulnerability is getting exploited

- EX is a number from 0-10 on the exploitability factor (easiness of exploitation, availability of exploit and similar)

- EPSS is the EPSS score, a probability between 0 and 1 that the vulnerability will be exploited in the next 30 days
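A minimal sketch of the PE formula above, with two assumptions flagged in the comments: the Zeroday input, which the text does not define, is treated as another 0-10 value, and EPSS is multiplied by 10 so its 0-1 probability sits on the same scale as the other inputs. The default weights (0.3, 0.1, 0.2) are hypothetical; they are chosen so that the five weight terms sum to 1, which keeps PE within 0-10.

```python
def probability_of_exploitation(cti: float, chatter: float, zeroday: float,
                                epss: float, ex: float,
                                wc: float = 0.3, wz: float = 0.1,
                                wex: float = 0.2) -> float:
    """PE = WC*CTI + WZ*Chatter + WZ*Zeroday + WC*EPSS + WEX*EX.

    cti, chatter, zeroday, ex -- 0-10 scores (zeroday's scale is an assumption)
    epss                      -- EPSS probability, 0-1
    """
    for name, value in (("cti", cti), ("chatter", chatter),
                        ("zeroday", zeroday), ("ex", ex)):
        if not 0 <= value <= 10:
            raise ValueError(f"{name} must be in 0-10, got {value}")
    if not 0 <= epss <= 1:
        raise ValueError(f"epss must be in 0-1, got {epss}")
    epss_scaled = epss * 10  # assumption: put EPSS on the same 0-10 scale
    return (wc * cti + wz * chatter + wz * zeroday
            + wc * epss_scaled + wex * ex)


# Hypothetical inputs: heavily discussed, widely exploited, easy exploit
print(round(probability_of_exploitation(cti=9, chatter=8, zeroday=0, epss=0.9, ex=9), 2))  # 8.0
```

Keeping the weights summing to 1 is a design choice, not part of the original formula; it makes PE easy to read as a 0-10 score before it is fed into the risk formula.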

These exploitation probabilities are simple estimates; regression analysis or other statistical models could be used to refine them. Nonetheless, this illustrates that CVSS alone is no longer sufficient to calculate risk.

How we can help

AppSec Phoenix is a SaaS platform that ingests security data from multiple tools, correlates and contextualises it, and precisely delivers the most important vulnerabilities into the development backlog.

Calculating and prioritizing vulnerabilities at scale, as you can see above, can be extremely complex and time-consuming, and can lead to burnout.

Phoenix Security ingests data from appsec, vulnerability assessment and cloud tools, contextualising, collating, and prioritising, delivering an actionable risk-based view.

We enable security teams to deliver actionable insight to the right owner, so they can fix today the problems that will be exploited tomorrow, without burning out on triage.

If you are interested in applying prioritization and locality, and in automatically raising the vulnerabilities that matter most: https://staging-appsecphoenixcom.kinsta.cloud/demo

Other useful references:

For more information on exploitability factors: https://www.first.org/epss/

Data on EPSS: https://www.first.org/epss/data_stats

FAIR framework: https://www.fairinstitute.org/