I would like to provide a perspective on the hype surrounding AI-first/Agents and this new security arms race. While I’m a big believer and driver of LLM applications to replace aspects of manual work, I’m also skeptical of the AI-wash in ASPM and Vulnerability Management. As I’m spearheading the AI initiative here at Phoenix Security, this might all sound strange, but bear with me. Everyone wants to automate and leverage AI for efficiencies. But automation without direction is just chaos in fast-forward.

TL;DR: Don’t Let LLMs Run the Show

Note – it was fun to write a whole article and have a section of it prompt-engineered. It was also fun to see the LLMs commenting on themselves; I had a bit of a philosophical moment when the LLMs were judging themselves and their own use. Nonetheless, as I set out in this article, LLMs/AI and agents are tools for automation and enhancement, not a replacement. This article is AI-augmented and AI-corrected (grammar tools apply AI), but written by me as always.

Large Language Models (LLMs) are not silver bullets. Without proper attribution, correlation and context, they are just expensive guessing machines, churning out plausible gibberish at scale. In security, plausible without provable is a liability.

Here’s what we’ve learned: You don’t need more vulnerabilities. You need clarity. You need to know who must fix what, where and how fast. That’s why we believe in AI-agent-second. First, you contextualize. Then, let the agents do the heavy lifting. This article breaks down our approach at Phoenix Security. It introduces three autonomous AI agents: The Researcher, The Analyzer and The Remediator — each designed to work only after vulnerabilities are fully attributed across four critical dimensions: ownership, location, exposure and business impact.

Why “AI-First” Agents are the Wrong Starting Point

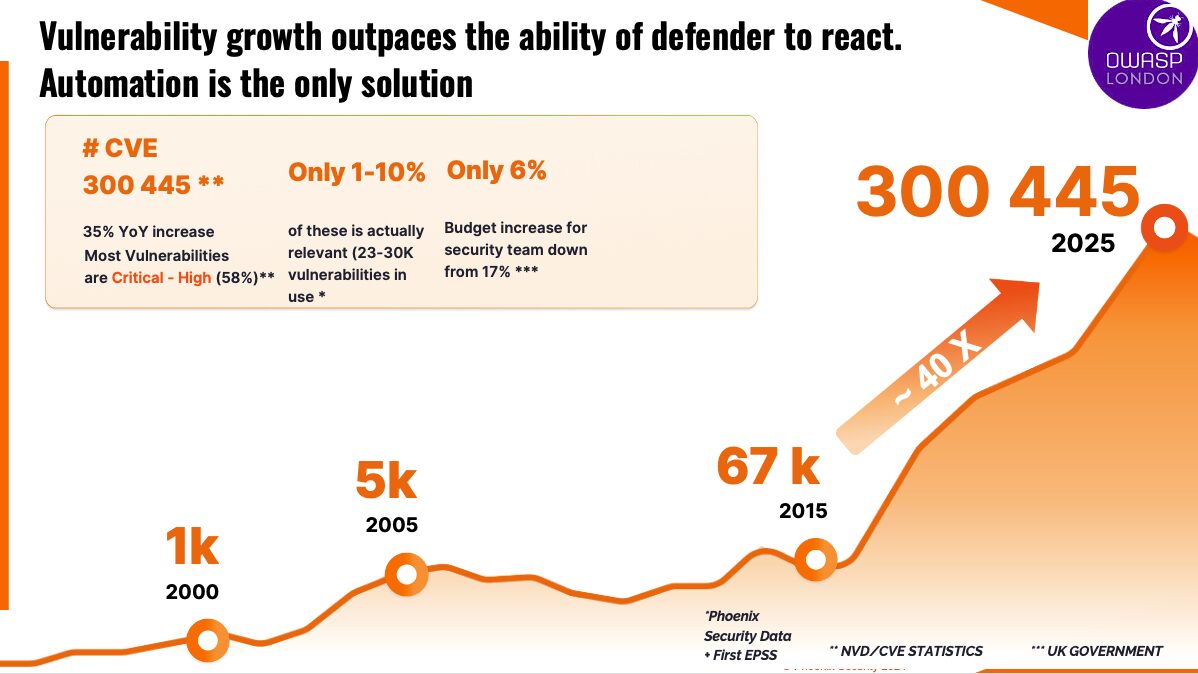

Dumping 300,000+ CVEs into an LLM and asking, “What should I do?”, is like asking a tourist for directions in a city with no street signs. You’ll get an answer that may even sound correct, but it is unlikely to be the right course of action.

We are observing a flood of AI-generated bug reports hitting developers. Most of these reports are noise that lacks evidence of exploitation, context or relevance. Maintainers waste hours triaging reports that lead nowhere.

The same mistake is happening in enterprise security. LLMs are being dropped into pipelines as silver bullets or security prophets. Except they’re not bullets and their wisdom (guidance) is often misaligned. They’re toy solutions used like Nerf darts that cost tokens and time.

We’ve all seen Security Information and Event Management (SIEM) systems flooded with logs. Now imagine LLMs flooded with uncorrelated vulnerabilities. Instead, we need LLM output to go from flooding developers to providing context-specific streams of relevant information.

The Human Bottleneck is Real

Let’s be honest. Humans can’t keep up.

There are approximately 300K known vulnerabilities. Only about 23K matter in real-world impact. Out of those, just a fraction are urgent, exploitable or reachable. Yet most security teams spend 80%+ of their time chasing all of them equally.

Add in the reality that 41% of code today is written with AI assistance (GitHub Copilot), and we’re heading toward a world where insecure-by-default code meets AI-generated exploitation (XBOW).

And then remediation windows? Tightening. According to Cloudflare’s 2024 insights, the mean time to remediate (MTTR) exposed internet-facing vulnerabilities needs to be under 3 months—and ideally, far faster.

We can’t fix this manually. But throwing LLMs at the problem blindly won’t help either.

Context is the Compass. Agents are the Engine.

Phoenix Security takes a threat-centric and context-first approach to Application Security Posture Management (ASPM). We don’t believe in drowning teams in vulnerabilities; we favor an approach built on surgical precision.

Phoenix Security starts with 4-dimensional risk attribution:

- Asset Ownership – Who is responsible?

- Deployment Location – Where is it deployed (cloud, container, on-prem)?

- Exposure Level – Internal, DMZ, or Internet-facing?

- Business Context – How critical is it to operations?

Only after this context is built do we deploy our agents. Look at the complete data model.
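To make the "context before agents" rule concrete, here is a minimal sketch of an attribution gate. The `Finding` class, its field names, and the 1-to-5 criticality scale are illustrative assumptions, not Phoenix Security's actual data model; the only idea taken from the article is that agents run once all four dimensions are filled in.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Finding:
    """One vulnerability plus the four attribution dimensions (hypothetical field names)."""
    vuln_id: str
    owner: Optional[str] = None                 # Asset Ownership: who is responsible?
    location: Optional[str] = None              # Deployment Location: cloud, container, on-prem
    exposure: Optional[str] = None              # Exposure Level: internal, dmz, internet
    business_criticality: Optional[int] = None  # Business Context: assumed scale, 1 (low) to 5 (high)


def missing_dimensions(finding: Finding) -> List[str]:
    """Return the attribution dimensions that are still unset."""
    dims = ("owner", "location", "exposure", "business_criticality")
    return [d for d in dims if getattr(finding, d) is None]


def ready_for_agents(finding: Finding) -> bool:
    """Agents only run once all four dimensions are attributed."""
    return not missing_dimensions(finding)


raw = Finding("CVE-2023-XYZ1")
attributed = Finding("CVE-2023-XYZ1", owner="team-x", location="container",
                     exposure="internet", business_criticality=5)

print(ready_for_agents(raw), missing_dimensions(raw))
print(ready_for_agents(attributed))
```

A finding that fails the gate goes back to attribution instead of being handed to an LLM, which is the whole point of the AI-agent-second ordering.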

The information in this article is also available in greater detail in the eBook – A threat-centric approach on vulnerabilities.


Meet the AI Agents for ASPM: The Researcher. The Analyzer. The Remediator

These are not chatbots. These are autonomous agents with clear objectives, designed to collaborate after attribution is complete.

These agents are built with an AI-second philosophy: all the data is related, contextualized, deduplicated, and attributed first, to minimize noise, hallucinations, and the false-positive rate. We use role-specific agents to go from detection to response, focusing on three main pillars: Research, Analysis, and Response.

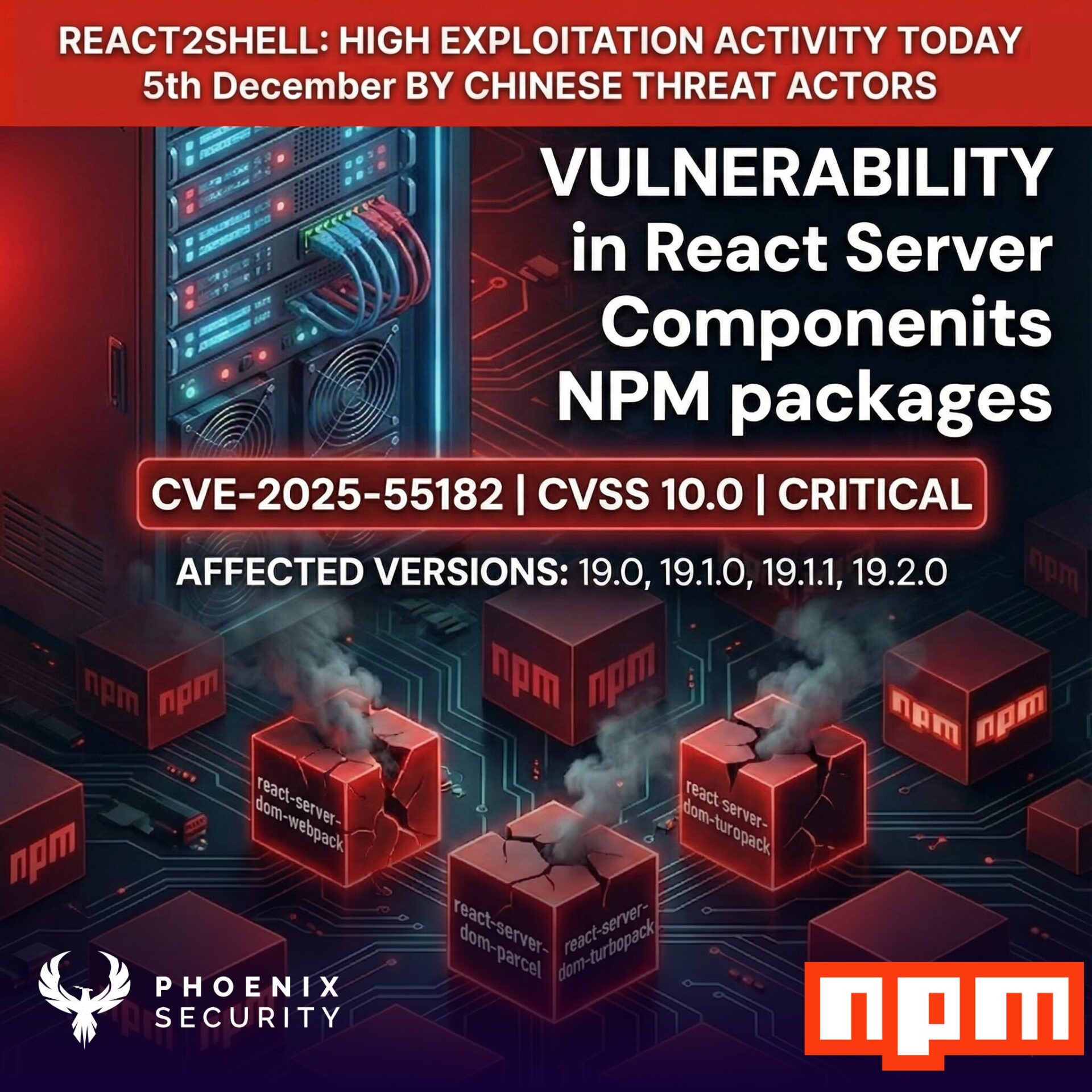

The Researcher

This agent scans real-time threat intel, CVE exploitation data, ransomware activity, and dark web chatter. It highlights emerging risks based on likelihood, weaponization, and trending threats.

This is your early warning radar. Not just “this is a CVE” but “this CVE is blowing up across exploitation databases.”

Behavior:

- Reviews the full vulnerability record, working with both CVE and non-CVE-based vulnerabilities;

- Self‑updates watch lists and analyzes data on vulnerabilities and threat actors;

- Classifies the vulnerabilities by root cause and possible impact;

- Predicts exploitation for CVE and non-CVE-based vulnerabilities, and the likelihood of a vulnerability being used in a ransomware attack;

- Creates a vectorial description of the vulnerability and a full description of the vulnerability, weakness, and threat actor.

This replaces hours of manual CTI review. It doesn’t guess. It watches, suggests CTI, and helps humans decide based on exposure, providing detailed context on how bad a vulnerability could become.

E.g. NVD description: Rockwell Automation MicroLogix 1400 Controllers and 1756 ControlLogix Communications Modules. An unauthenticated, remote threat actor could send a CIP connection request to an affected device, and, upon successful connection, send a new IP configuration to the affected device even if the controller in the system is set to Hard RUN mode. When the affected device accepts this new IP configuration, a loss of communication occurs between the device and the rest of the system as the system traffic is still attempting to communicate with the device via the overwritten IP address.

Phoenix Security: Probability of exploitation and likelihood of ransomware Medium to Low.

The Analyzer

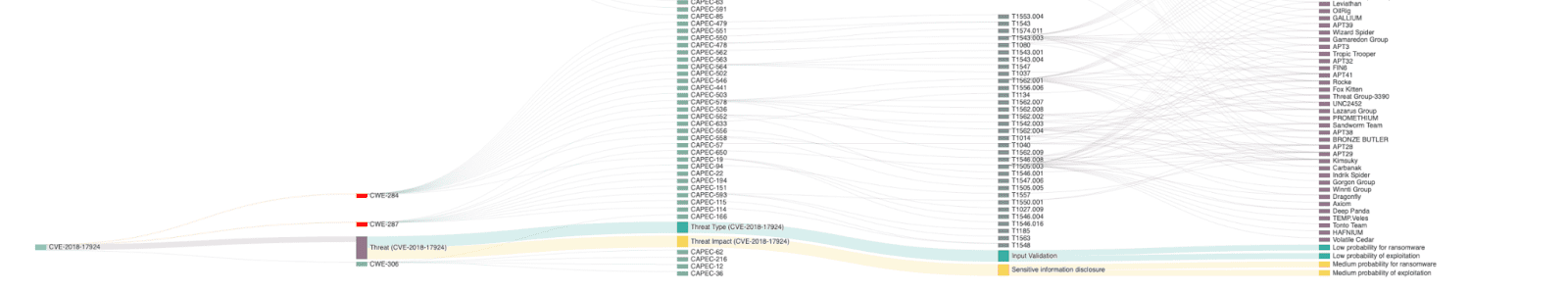

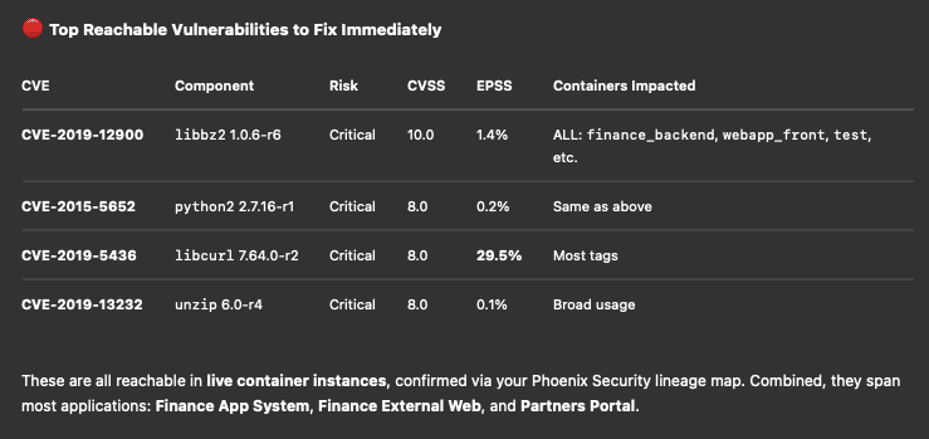

This agent contextualizes each vulnerability. It maps the attack path. It correlates threat modeling data. It tells you whether the vuln is reachable, exploitable, and chained with others.

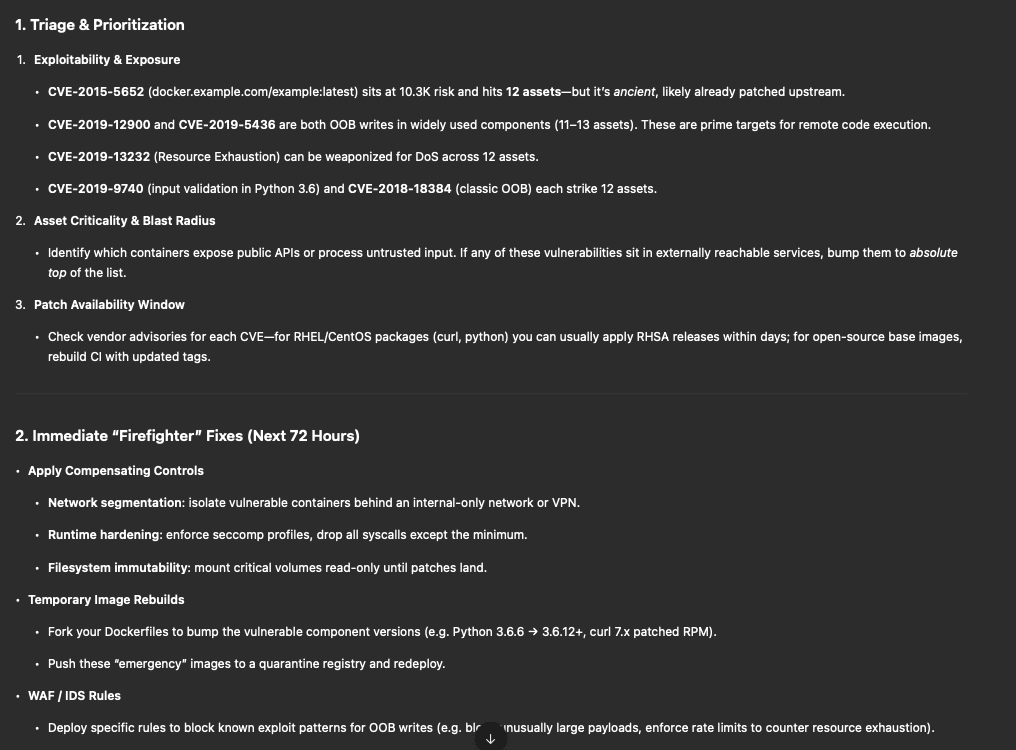

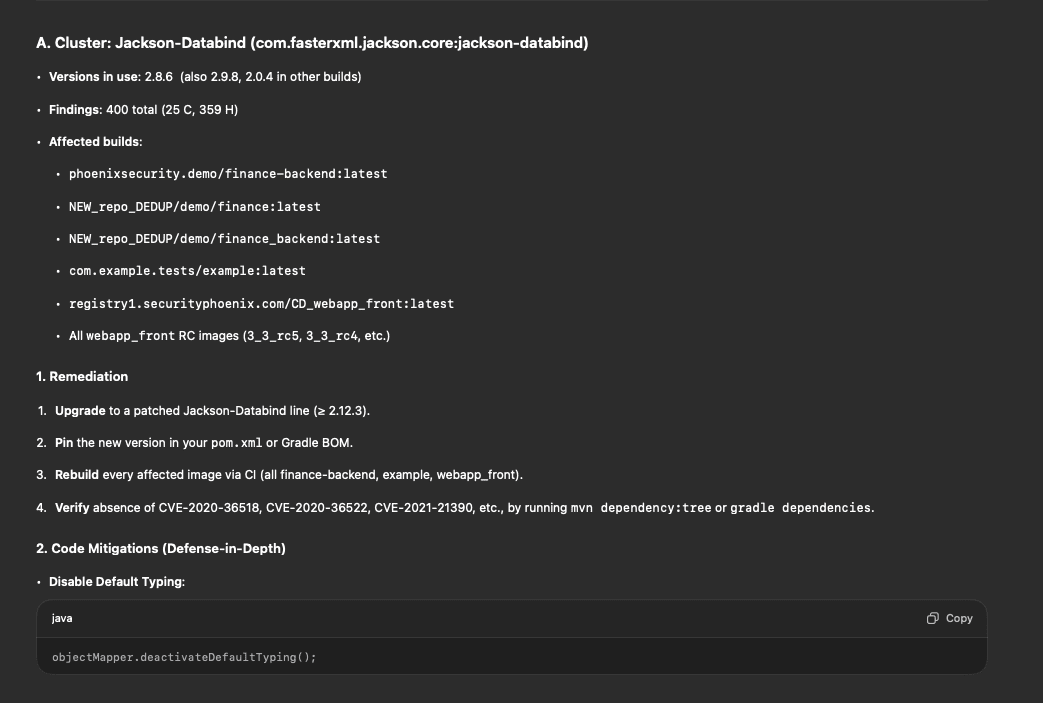

Without it, you might get: Update this container base image.

When the real fix is: Patch a specific dependency in auth-handler.js used by team X.

Behavior:

- Correlates attacks and the probability of exploitation based on industry and threat actors;

- Models the exploitation with TTPs and MITRE ATT&CK techniques;

- Performs the risk assessment of the vulnerabilities based on the context;

- Scores based on internal exposure and chaining potential;

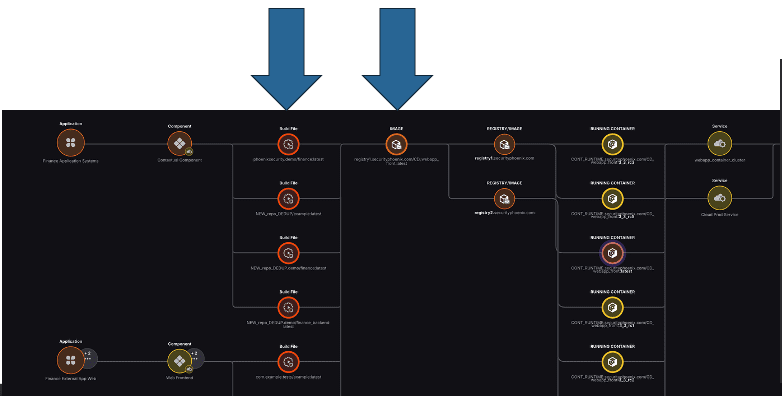

- Leverages Phoenix’s reachability engine to ignore unreachable libraries;

- Leverages Phoenix container lineage and throttling to detect which library is actually reachable and which container is actually active;

- Leverages deep context CTI and correlates all the vulnerabilities for one/multiple assets;

- Creates an aggregated description of the problems to support concise but comprehensive remediation.

It moves teams from “looks critical” to “this is your real risk.” The Analyzer also acts as a guide for non-technical team members, helping them understand and answer the question “why is this bad?”
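The two Analyzer behaviors that are easiest to illustrate are ignoring unreachable findings and scoring the rest on exposure and chaining potential. The sketch below is a toy version under stated assumptions: the `AttributedVuln` type, the exposure weights, and the scoring formula are all hypothetical, since the article does not publish Phoenix's reachability engine or risk formula.

```python
from dataclasses import dataclass

# Hypothetical exposure weights; the article does not publish a scoring formula.
EXPOSURE_WEIGHT = {"internal": 1.0, "dmz": 2.0, "internet": 3.0}


@dataclass
class AttributedVuln:
    vuln_id: str
    reachable: bool    # assumed output of a reachability analysis
    exposure: str      # "internal", "dmz" or "internet"
    chained_with: int  # number of other findings this one can be chained with


def contextual_score(v: AttributedVuln) -> float:
    """Toy score: exposure weight, increased by 50% per chainable finding."""
    return EXPOSURE_WEIGHT[v.exposure] * (1 + 0.5 * v.chained_with)


def prioritize(vulns):
    """Ignore unreachable findings, then sort the rest by descending score."""
    reachable = [v for v in vulns if v.reachable]
    return sorted(reachable, key=contextual_score, reverse=True)


findings = [
    AttributedVuln("VULN-A", reachable=False, exposure="internet", chained_with=3),
    AttributedVuln("VULN-B", reachable=True, exposure="internal", chained_with=0),
    AttributedVuln("VULN-C", reachable=True, exposure="internet", chained_with=1),
]
ranked = [v.vuln_id for v in prioritize(findings)]
print(ranked)
```

Note that VULN-A would top a severity-only ranking, yet it never appears in the output because nothing can reach it.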

The Remediator

This is your AI-powered workflow engine. It routes tickets, clusters related vulnerabilities, and maps resolution options.

It doesn’t say: Patch all these libraries.

It says: These 12 CVEs are resolved by bumping one package. No breaking changes expected. Assign to Team X.

Behavior:

- Augments vulnerability data in campaigns and tickets, and clusters it into remediation groups;

- Builds dependency trees for remediation impact;

- Clusters fixes into low-effort, high-impact buckets;

- Provides remediation:

  - Low‑complexity upgrades → Dev PRs with different suggestions;

  - Base‑image rebuilds → Platform team tickets;

  - Network controls → Firewall policy scripts, WAF rules.

It doesn’t replace humans. Instead, it saves humans 90% of the work associated with that task or process.
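The "12 CVEs resolved by bumping one package" idea can be sketched as grouping findings by owning team and package, then choosing the highest fixed version in each group. This is an illustrative sketch only: the finding tuples, package names, and `cluster_by_fix` helper are hypothetical, and it assumes simple dotted numeric versions where a later release also contains earlier fixes.

```python
from collections import defaultdict

# Hypothetical findings: (vulnerability id, package, version that fixes it, owning team)
findings = [
    ("VULN-1", "libfoo", "2.4.1", "team-x"),
    ("VULN-2", "libfoo", "2.4.1", "team-x"),
    ("VULN-3", "libfoo", "2.3.9", "team-x"),
    ("VULN-4", "libbar", "1.0.7", "team-y"),
]


def version_key(version: str):
    """Turn '2.4.1' into (2, 4, 1) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def cluster_by_fix(rows):
    """Group findings per (team, package) and keep the highest fixed version,
    so a single bump resolves every finding in the group (assumes fixes are cumulative)."""
    groups = defaultdict(lambda: {"vulns": [], "bump_to": "0"})
    for vuln_id, package, fixed_in, team in rows:
        group = groups[(team, package)]
        group["vulns"].append(vuln_id)
        if version_key(fixed_in) > version_key(group["bump_to"]):
            group["bump_to"] = fixed_in
    return dict(groups)


actions = cluster_by_fix(findings)
for (team, package), group in actions.items():
    print(f"{team}: bump {package} to {group['bump_to']} "
          f"(resolves {len(group['vulns'])} findings)")
```

Four findings collapse into two actions here, each already routed to a team, which is the shape of output the Remediator is described as producing.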

Another Example with the Contextual Remediation

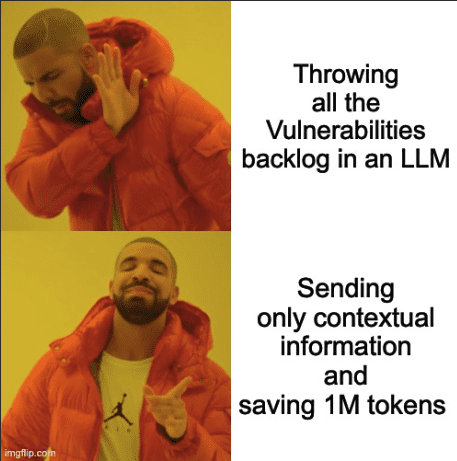

For this comparison, we assume the correlation and contextualization work from Phoenix Security is already done, and we send the vulnerabilities to a capable LLM such as o3 in ChatGPT.

With a simple dump of the vulnerabilities (we focus on SCA), we receive only poor, generic advice:

By contrast, providing the full context, lineage, and correlation graph gives you this:

Based on the vulnerability graph:

The Difference Between Useful AI and Expensive Guessing

Here’s a real-world contrast:

| Scenario | Without Context | With Agentic AI |
| --- | --- | --- |
| CVE-2023-XYZ1 | GPT suggests kernel patch | Analyzer confirms it’s not reachable on container |
| 50 critical CVEs | LLM clusters them by severity | Remediator clusters by shared fix in base image |
| “Fix Log4j” | GPT recommends scanning everything | Analyzer + Remediator: Log4j only affects one legacy repo, patch there |

Why “Garbage In, Garbage Out” Applies to AI, Too

Let’s stop wasting tokens.

Dumping uncorrelated CVEs into an LLM is like pouring 10 million logs into a SIEM with no rules. You’ll spend more time trying to understand the noise than taking action on the signals.

Worse—LLMs can hallucinate convincing but wrong remediation. Like suggesting an NGINX upgrade for a CVE in Apache. Or flagging a dev-only dependency as critical in production.

We’ve tested this. Context-free LLMs often:

- Recommend unneeded base image updates;

- Flag vulnerabilities in dead code;

- Miss chainable exploits due to surface-level analysis.

From SIEM Noise to Surgical Guidance

In the same way SIEMs evolved with correlation rules and enrichment layers, ASPM must evolve with contextual agents. Otherwise, we’re back to square one. Just with a cooler UI.

Context is the difference between noise and insight. Agents are the difference between hours and minutes.

The AI-Agent-Second Philosophy

Everyone is racing to slap AI on top of their dashboards. But most miss the point.

AI is not about replacing security professionals. It’s about amplifying them. Giving them superpowers. But only when you’ve already done the hard work of attribution.

That’s why at Phoenix Security, we say:

We think differently. We think Human.

Not AI First, but AI Second: Context before prediction delivers better results, driving the right fix to the right team.

In a world racing toward AI-first agents, we introduce AI-agent-second.

Real-World Use Case: 10Xing the DevSecOps Workflow

Without agents:

- Team reviews 700 tickets manually

- Context built in spreadsheets

- Ticket routing via Slack pings

- Fixes delayed 3–6 months

- No prioritization by exposure or impact

With agents:

- Only 43 tickets created

- Pre-attributed by team, system, and location

- Analyzer confirms reachability

- Researcher flags 8 active exploits

- Remediator bundles 20 issues into 3 actions

⏰ Time saved: Hundreds of hours

📊 Risk reduced: Measurable

🙂 Stress level: Lowered

Real‑World Example: Container Mayhem to Surgical Fix

Raw scan: 165 medium/critical CVEs in node‑backend:14‑alpine.

Without context, the LLM recommends: upgrade to node‑backend:20 → breaks the build chain; the backlog balloons.

With AI‑Agent‑Second:

- Analyzer sees only 11 reachable packages.

- Researcher flags CVE‑2024‑56789 (heap‑overflow) as now exploited.

- Remediator proposes: Rebuild base image with alpine 3.18.3 + apply temporary seccomp profile.

Result: one PR, two lines in Dockerfile, released in 30 minutes.

How the Agents Collaborate—A Day in the Life

- 08:03 UTC – Researcher detects chatter about a new Citrix ADC exploit kit hitting Telegram, confidence 0.82.

- 08:04 – Analyzer cross‑checks: Citrix version 13.1 vulnerable in DMZ cluster, exploit path reaches payment API.

- 08:05 – Analyzer tags risk as CRITICAL, external, revenue‑impacting.

- 08:06 – Remediator sees patch window exceeds 4 hours; auto‑creates a Citrix responder workflow + WAF virtual patch, assigns SRE pair for after‑hours patch.

- 08:07 – Slack war‑room spun up automatically; human engineer just approves the workaround.

Total human time: <5 minutes. Without context, the LLM would have spammed “upgrade everything” and stalled the sprint.
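The hand-off in the timeline above can be sketched as three small functions run in order, each able to stop the flow. Everything here is a hypothetical illustration: the `INVENTORY` dictionary stands in for the attribution data, the 0.8 confidence threshold is an assumption, and only the 4-hour patch window comes from the timeline itself.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical inventory, standing in for the attribution data built beforehand.
INVENTORY = {"Citrix ADC": {"exposure": "dmz", "reaches": "payment API"}}


@dataclass
class Incident:
    product: str
    confidence: float
    severity: str = "UNRATED"
    actions: List[str] = field(default_factory=list)


def researcher(product: str, confidence: float, threshold: float = 0.8) -> Optional[Incident]:
    """Open an incident only when threat-intel confidence passes the (assumed) threshold."""
    return Incident(product, confidence) if confidence >= threshold else None


def analyzer(incident: Incident) -> Optional[Incident]:
    """Rate the incident using the asset's known exposure; drop it if not deployed."""
    asset = INVENTORY.get(incident.product)
    if asset is None:
        return None
    external = asset["exposure"] in ("dmz", "internet")
    incident.severity = "CRITICAL" if external else "MEDIUM"
    return incident


def remediator(incident: Incident, patch_window_hours: float) -> Incident:
    """Propose a workaround when a critical fix cannot land within 4 hours."""
    if incident.severity == "CRITICAL" and patch_window_hours > 4:
        incident.actions.append("WAF virtual patch (pending human approval)")
    incident.actions.append("scheduled patch")
    return incident


def handle(product: str, confidence: float, patch_window_hours: float) -> Optional[Incident]:
    """Run the three stages in order, stopping as soon as one filters the event out."""
    incident = researcher(product, confidence)
    if incident is None:
        return None
    incident = analyzer(incident)
    if incident is None:
        return None
    return remediator(incident, patch_window_hours)


result = handle("Citrix ADC", confidence=0.82, patch_window_hours=6)
ignored = handle("Unknown Appliance", confidence=0.95, patch_window_hours=6)
print(result)
print(ignored)
```

The second call returns nothing because the product is not in the inventory, so no ticket is raised for an asset the organization does not run. The human stays in the loop through the "pending human approval" step rather than being replaced.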

Quantifiable Gains of Leveraging Context and Agents

| Metric | Pre‑agents | Post‑agents | Improvement |
| --- | --- | --- | --- |
| Mean‑time‑to‑remediate internet‑facing vulns | 19 days | <2 days | 9× |
| Engineer hours per valid ticket | 4.2 h | 0.4 h | 10.5× |
| False‑positive triage rate | 37 % | <5 % | 7× |

These results mirror Copilot’s 55 % productivity boost for coding (https://www.microsoft.com/en-us/research/publication/the-impact-of-ai-on-developer-productivity-evidence-from-github-copilot/)—but targeted at security operations.
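The improvement factors in the table are simple before/after ratios, and a short check makes the arithmetic explicit. The figures are taken from the table; because "<2 days" and "<5 %" are upper bounds, the sketch treats them as 2 and 5, so the computed ratios are lower bounds on the improvement.

```python
# Figures from the table; "<2 days" and "<5 %" are treated as 2 and 5,
# which makes the resulting ratios lower bounds.
metrics = {
    "MTTR, internet-facing vulns (days)": (19, 2),
    "Engineer hours per valid ticket": (4.2, 0.4),
    "False-positive triage rate (%)": (37, 5),
}

factors = {name: before / after for name, (before, after) in metrics.items()}
for name, factor in factors.items():
    print(f"{name}: at least {factor:.1f}x better")
```

This gives roughly 9.5×, 10.5× and 7.4×, in line with the rounded values in the table.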

The Environmental Angle—Tokens Aren’t Free

OpenAI estimates a 1‑B‑parameter inference burns ~0.0003 kWh. Multiply that by brute‑forcing every CVE and you light up a small data‑centre for zero actionable output. Context‑first reduces token calls by >90 %, slashing cost for you and carbon for the environment.

DevSecOps Needs Clarity, Not Clutter

If you’re leading AppSec, you don’t need another dashboard. You need answers.

Not “what are all my CVEs?”

But: “What matters, who owns it, and how do we fix it fast?”

Agentic AI makes that happen—but only when used after contextualization.

Final Thoughts

Too many security teams are burning hours doing spreadsheet gymnastics to chase owners. Too many AI tools are just generating more plausible confusion.

The fix isn’t more data. It’s better attribution.

The power isn’t in LLMs. It’s in how you guide them.

The outcome isn’t about AI replacing people.

It’s about letting people operate like 10x teams—because the agents have their back.

If you’re ready to move from fragmented alerts to focused action, it’s time to adopt an AI-agent-second mindset.

Start with context. Then let your agents fly.

Further Reading

- https://phoenix.security/whitepapers-resources/ebook-llm-threat-centric/

- https://phoenix.security/threat-centric-agentic-ai/

- https://phoenix.security/threat-centric/

- https://phoenix.security/ai-threat-centric/

- https://blog.cloudflare.com/application-security-report-2024-update/

- https://www.microsoft.com/en-us/Investor/events/FY-2023/Morgan-Stanley-TMT-Conference

- https://www.techspot.com/news/101440-ai-generated-bug-reports-waste-time-developers.html

- https://arxiv.org/abs/2401.03315

How Phoenix Security Can Help

Organizations often face an overwhelming volume of security alerts, including false positives and duplicate vulnerabilities, which can distract from real threats. Traditional tools may overwhelm engineers with lengthy, misaligned lists that fail to reflect business objectives or the risk tolerance of product owners.

Phoenix Security offers a transformative solution through its Actionable Application Security Posture Management (ASPM), powered by AI-based Contextual Quantitative analysis and a Threat Centric approach. This approach correlates runtime data with code analysis and leverages the threats that are more likely to lead to zero-day attacks and ransomware to deliver a single, prioritized list of vulnerabilities. This list is tailored to the specific needs of engineering teams and aligns with executive goals, reducing noise and focusing efforts on the most critical issues.

Why do people talk about Phoenix?

• Automated Triage: Phoenix streamlines the triage process using a customizable 4D risk formula, ensuring critical vulnerabilities are addressed promptly by the right teams.

• Contextual Deduplication: Utilizing canary token-based traceability, Phoenix accurately deduplicates and tracks vulnerabilities within application code and deployment environments, allowing teams to concentrate on genuine threats.

• Actionable Threat Intelligence: Phoenix provides real-time insights into vulnerabilities’ exploitability, combining runtime threat intelligence with application security data for precise risk mitigation.

By leveraging Phoenix Security, you not only unravel the potential threats but also take a significant stride in vulnerability management, ensuring your application security remains up to date and focuses on the key vulnerabilities.